Do I need to update video card drivers when mining?

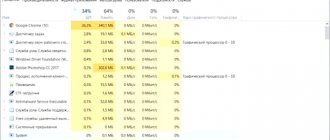

If you have assembled a rig for cryptocurrency mining, installing the video card driver is vital; without it the equipment will freeze. To achieve high mining performance, use the most suitable, up-to-date drivers and configure the operating system correctly.


Opening the driver control panel

There are several ways to open the AMD GPU configuration tool:

- The easiest way is the desktop context menu: right-click an empty area of the desktop and select “Radeon Settings” or “AMD Catalyst Control Center”.

- The next method is the Start menu: open it, find the folder containing the application, and launch it.

- You can also use the system tray, the area in the lower right corner of the screen that holds the icons of background applications. To open the main settings menu, either right-click the graphics card control panel icon or double-click it with the left mouse button.

- The next option is Windows Search. In Windows 10, click the search button or box on the taskbar, type radeon or amd, press Enter, and use the relevant result.

- The last method, to be used only as a last resort, is to launch the executable file directly. Open an Explorer window, click the address bar, paste one of the paths below into it, and press Enter:

  - C:\Program Files\AMD\CNext\CCCSlim or C:\Program Files (x86)\AMD\CNext\CCCSlim for AMD Catalyst Control Center;

  - C:\Program Files\AMD\CNext\CNext for Radeon Settings.

  Next, find the file named CCC.exe or RadeonSettings.exe and run it to open the corresponding panel.

What to do if Radeon Settings won't open

If none of the methods above opens the panel, work through the following checks. The first step is to check whether drivers for the video card are installed at all, since this is often the cause. Open Device Manager: press Win+R to bring up the “Run” dialog and enter the command devmgmt.msc.

Next, expand the “Display adapters” category and check how your AMD device is listed. If it appears as “Microsoft Basic Display Adapter”, the GPU software is not installed.


If the video card is displayed as expected, but there is a yellow error triangle on the icon, right-click on it and select “Properties”.

Check the “Device status” block: it shows the specific error and its code. Codes 2 and 10 indicate driver problems; in this case, either install the necessary software or reinstall it completely.


If the error code is 43, the device itself is usually faulty, and attempts to reinstall the drivers are unlikely to help.


Software causes cannot be ruled out either, for example malware that has damaged the driver. Uninstalling and reinstalling the driver will fix the symptom, but it is better to remove the root cause as well.



Useful features

It remains only to mention a few functions that may come in handy:

    CALresult result;

    // Get the status of an individual device
    CALdevicestatus status;
    result = calDeviceGetStatus( &status, device );

    // Force all tasks running on the GPU to complete
    result = calCtxFlush( context );

    // Get information about a kernel function (after compilation)
    CALfunc function;
    CALfuncInfo functionInfo;
    result = calModuleGetFuncInfo( &functionInfo, context, module, function );
    /* While this function looks nice, it didn't work in my case
       (I was trying to find out the maximum number of threads the kernel can run with) */

    // Get a description of the last error reported by aticalrt.dll
    const char* errorString = calGetErrorString();

    // Get a description of the last error reported by aticalcl.dll (the compiler)
    const char* compilerErrorString = calclGetErrorString();

Compiling and loading the kernel on the GPU

“The death of Koshchei in a needle, a needle in an egg, an egg in a duck, a duck in a hare, a hare in a chest...”

The process of loading the kernel onto the GPU can be described as follows: the source code (plain text) is compiled into an object; one or more objects are linked into an image; the image is loaded into a GPU module; and from the module you obtain a pointer to the kernel entry point, which is then used to launch the kernel.

Here is how this is implemented:

    const char* kernel; // the kernel source text should be here

    // Find out which GPU to compile for
    unsigned int deviceId = 0; // GPU identifier
    CALdeviceinfo deviceInfo;
    CALresult result = calDeviceGetInfo( &deviceInfo, deviceId );

    // Compile the source
    CALobject obj;
    result = calclCompile( &obj, CAL_LANGUAGE_IL, kernel, deviceInfo.target );

    // Link the object into an image
    CALimage image;
    result = calclLink( &image, &obj, 1 ); // second parameter: array of objects; third: number of objects

    // The object is no longer needed, so free it
    result = calclFreeObject( obj );

    // Load the image into a module
    CALmodule module;
    result = calModuleLoad( &module, context, image );

    // Get the kernel entry point
    CALfunc function;
    result = calModuleGetEntry( &function, context, module, "main" );

Rule No. 9: the kernel always has exactly one entry point, since only one function remains after linking: the “main” function.

That is, unlike Nvidia CUDA, the AMD CAL kernel can only have one global function “main”.

As you may have noticed, the compiler can only process source code written in IL.

The image is loaded into a module because it has to be bound to a specific GPU context. The compilation process described above must therefore be repeated for each GPU. The exception is two identical GPUs: it is enough to compile and link once, but the image still has to be loaded into a module for each card.

I would like to draw your attention to the possibility of linking several objects. Maybe someone will find this feature useful. In my opinion, it can be applied in the case of different implementations of the same subfunction: these implementations can be moved to different object codes, since AMD IL does not have preprocessor directives like #ifdef.

After the kernel execution on the GPU is completed, the corresponding resources will need to be released:

    CALresult result = calclFreeImage( image );
    result = calModuleUnload( context, module );

Driver initialization

Rule No. 1: before starting to work with the GPU, wash your hands and initialize the driver with the following call:

    CALresult result = calInit();

Rule No. 2: after working with the GPU, do not forget to clean up after yourself and shut the driver down properly. This is done by calling:

    CALresult result = calShutdown();

These two calls must always be paired. A program may contain several such pairs, but never work with the GPU outside of them: doing so may cause a hardware exception.
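The pairing rule can be sketched in plain C without the CAL SDK. In the sketch below, `driver_init` and `driver_shutdown` are hypothetical stand-ins for `calInit()` and `calShutdown()`, and `run_with_driver` is an illustrative helper, not part of CAL. It only shows one way to guarantee that shutdown runs exactly once for every successful initialization.

```c
#include <assert.h>
#include <stdbool.h>

/* Stand-ins (assumptions) for calInit()/calShutdown(): they only count
 * how many init calls are still waiting for their shutdown. */
static int g_active_sessions = 0;

bool driver_init(void)     { ++g_active_sessions; return true; }
void driver_shutdown(void) { assert(g_active_sessions > 0); --g_active_sessions; }
int  active_sessions(void) { return g_active_sessions; }

/* Hypothetical callback type for the work done while the driver is up. */
typedef bool (*gpu_work_fn)(void *arg);

/* Runs `work` between a matched init/shutdown pair.
 * Shutdown is skipped only when init itself failed. */
bool run_with_driver(gpu_work_fn work, void *arg)
{
    if (!driver_init())
        return false;          /* nothing was initialized, nothing to shut down */
    bool ok = work(arg);       /* all GPU work happens inside the pair */
    driver_shutdown();         /* always paired with the successful init */
    return ok;
}

/* Sample work: records that it ran and checks that the driver is active. */
bool sample_work(void *arg)
{
    int *calls = arg;
    ++*calls;
    return active_sessions() == 1;
}
```

Calling `run_with_driver(sample_work, &calls)` runs the work inside one init/shutdown pair and leaves no session open afterwards.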

Cross-thread synchronization

Unlike Nvidia CUDA, you don't need to do any additional context work if you're accessing the GPU from different threads.

But there are still some restrictions.

Rule No. 12: the CAL compiler functions are not thread safe. Within one application, only one thread can work with the compiler at a time.

Rule No. 13: all core CAL functions that operate on a specific context or device are thread safe. All other functions are not thread safe.

Rule No. 14: only one application thread at a time can work with a particular context.

Memory copy

Obtaining direct memory access

If the GPU supports mapping its memory (mapping its memory addresses into the process address space), we can get a pointer to this memory and then work with it like any other memory:

    unsigned int pitch;
    unsigned char* mappedPointer;
    CALresult result = calResMap( (CALvoid**)&mappedPointer, &pitch, resource, 0 ); // the last parameter is flags; according to the documentation, they are not used

When we have finished working with the memory, the mapping must be released:

    CALresult result = calResUnmap( resource );

Rule No. 7: GPU memory is subject to alignment. This alignment is characterized by the pitch.

Rule No. 8: pitch is measured in elements, not bytes.

Why do you need to know about this alignment? Unlike RAM, GPU memory is not always a contiguous area. This is especially true when working with textures. For example, if you want to work with a texture of 100x100 elements and calResMap() returns a pitch of 200, the GPU actually works with a 200x100 texture, of which only the first 100 elements in each row are used.
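To make the pitch arithmetic concrete, here is a minimal sketch in plain C that does not call CAL at all. The helper names `element_offset` and `mapped_size` are hypothetical, and the 16-byte element size is the value used in this article rather than something queried from the driver.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption taken from the article: every element occupies 16 bytes. */
enum { ELEMENT_SIZE = 16 };

/* Byte offset of element (x, y) in a mapped resource whose rows are
 * `pitch` elements apart. Note that pitch is counted in elements. */
size_t element_offset(size_t x, size_t y, size_t pitch)
{
    assert(x < pitch);                       /* x must lie inside the padded row */
    return (y * pitch + x) * ELEMENT_SIZE;
}

/* Number of bytes spanned by `height` padded rows. */
size_t mapped_size(size_t pitch, size_t height)
{
    return pitch * height * ELEMENT_SIZE;
}
```

For the 100x100 texture with a pitch of 200, `element_offset(0, 1, 200)` is 3200 bytes: the second row starts 200 elements after the first, not 100.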

Copying to GPU memory with the pitch taken into account can be organized as follows:

    unsigned int pitch;
    unsigned char* mappedPointer;
    unsigned char* dataBuffer;       // source data, prepared by the caller
    unsigned int width;              // resource width in elements, set by the caller
    unsigned int height;             // resource height in elements, set by the caller
    unsigned int elementSize = 16;

    CALresult result = calResMap( (CALvoid**)&mappedPointer, &pitch, resource, 0 );

    if( pitch > width )
    {
        // Rows are padded: copy row by row, skipping the padding
        for( unsigned int index = 0; index < height; ++index )
        {
            memcpy( mappedPointer + index * pitch * elementSize,
                    dataBuffer + index * width * elementSize,
                    width * elementSize );
        }
    }
    else
    {
        // No padding: copy everything in one call
        memcpy( mappedPointer, dataBuffer, width * height * elementSize );
    }

Naturally, the data in dataBuffer must be prepared according to the element type. But remember that an element is always 16 bytes in size. That is, for an element of the CAL_FORMAT_UNSIGNED_INT16_2 format, the byte-by-byte representation in memory is as follows:

    // w    - word, 16 bits
    // wi.j - i-th word, j-th byte
    // x    - garbage
    [ w0.0 | w0.1 | x | x ][ w1.0 | w1.1 | x | x ][ x | x | x | x ][ x | x | x | x ]
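The layout above can be illustrated with a small plain-C sketch that packs two 16-bit words into one 16-byte element. The function names are hypothetical, and two details are assumptions rather than facts from the article: byte 0 of each word is taken to be its low byte, and the padding bytes are zeroed instead of being left as garbage.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum { ELEMENT_SIZE = 16 };

/* Pack two 16-bit words into one 16-byte element:
 * [ w0.0 | w0.1 | x | x ][ w1.0 | w1.1 | x | x ][ x x x x ][ x x x x ] */
void pack_uint16_2(unsigned char element[ELEMENT_SIZE], uint16_t w0, uint16_t w1)
{
    assert(element != NULL);
    memset(element, 0, ELEMENT_SIZE);          /* zero the padding (assumption) */
    element[0] = (unsigned char)(w0 & 0xFF);   /* w0.0: low byte (assumption) */
    element[1] = (unsigned char)(w0 >> 8);     /* w0.1: high byte */
    element[4] = (unsigned char)(w1 & 0xFF);   /* w1.0 */
    element[5] = (unsigned char)(w1 >> 8);     /* w1.1 */
}

/* Read the two words back, ignoring the padding bytes. */
void unpack_uint16_2(const unsigned char element[ELEMENT_SIZE],
                     uint16_t *w0, uint16_t *w1)
{
    assert(element != NULL && w0 != NULL && w1 != NULL);
    *w0 = (uint16_t)(element[0] | (element[1] << 8));
    *w1 = (uint16_t)(element[4] | (element[5] << 8));
}
```

A buffer of such elements can then be passed as dataBuffer to the row-by-row copy shown earlier.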

Copying data between resources

Data is copied not directly between resources, but between their handles mapped into the context. The copy operation is asynchronous, so a system object of type CALevent is used to find out when it has completed:

    CALresource inputResource;
    CALresource outputResource;
    CALmem inputResourceMem;
    CALmem outputResourceMem;

    // Map the resources into the context
    CALresult result = calCtxGetMem( &inputResourceMem, context, inputResource );
    result = calCtxGetMem( &outputResourceMem, context, outputResource );

    // Copy the data
    CALevent syncEvent;
    result = calMemCopy( &syncEvent, context, inputResourceMem, outputResourceMem, 0 ); // the last parameter is flags; according to the documentation, they are not used

    // Wait for the copy operation to complete
    while( calCtxIsEventDone( context, syncEvent ) == CAL_RESULT_PENDING );
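The waiting loop above spins for as long as the event stays pending. As a possible variation, the polling can be bounded so that the caller regains control if the operation never completes. The sketch below is plain C with stand-ins only: `op_status`, `poll_fn`, `wait_for_completion` and `fake_copy_status` are all hypothetical names, and a real program would call calCtxIsEventDone() inside the callback.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-in status codes (assumption); CAL reports CAL_RESULT_PENDING instead. */
typedef enum { OP_OK, OP_PENDING, OP_ERROR } op_status;

/* Hypothetical callback: returns the current state of an async operation. */
typedef op_status (*poll_fn)(void *state);

/* Polls until the operation leaves the pending state or max_polls attempts
 * have been made. Returns the last status seen; OP_PENDING means the
 * attempt limit was reached first. */
op_status wait_for_completion(poll_fn poll, void *state,
                              unsigned max_polls, unsigned *polls_used)
{
    assert(poll != NULL);
    op_status status = OP_PENDING;
    unsigned used = 0;
    while (used < max_polls) {
        status = poll(state);
        ++used;
        if (status != OP_PENDING)
            break;
    }
    if (polls_used != NULL)
        *polls_used = used;
    return status;
}

/* Fake operation for demonstration: stays pending for *state more polls. */
op_status fake_copy_status(void *state)
{
    unsigned *remaining = state;
    if (*remaining > 0) {
        --*remaining;
        return OP_PENDING;
    }
    return OP_OK;
}
```

A caller that receives `OP_PENDING` can decide whether to keep waiting, do other work, or report a timeout.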