Since sub-28nm manufacturing became affordable and commercially viable for large chips such as discrete graphics processors, both AMD and NVIDIA have replaced their legacy products with new-generation chips that offer a completely different balance of performance and power consumption. However, while NVIDIA quickly filled every performance tier with Pascal-based accelerators and surpassed the performance records of the Maxwell era, AMD concentrated on mid-range and entry-level products, effectively leaving NVIDIA without competition in the high-end graphics segment.

Graphics cards based on the Polaris family of GPUs did well as affordable mainstream products and helped AMD's graphics division regain the market share it had lost during the Radeon 300 series era. However, AMD made it clear from the start that Polaris, unlike previous architectures, would not be scaled up into larger chips, as had happened with the Hawaii and later Fiji cores in the 28 nm era.

Indeed, while Polaris gained power efficiency from the move to 14 nm FinFET, it still shares many traits with older AMD products (Tonga and Fiji) that prevented it from exploiting the advanced process technology as effectively as it could have. And although AMD improved matters with the second-generation Polaris by refining both manufacturing and chip design, the Radeon RX 500 series cards are still limited in clock speed potential and lack architectural improvements that could raise per-clock performance. Both the GCN microarchitecture underpinning AMD's chips and the organization of blocks inside the GPU needed to be rethought, and that task fell to the upcoming Vega family.

To leave no doubt that it is returning to the enthusiast GPU market, AMD disclosed a good deal about Vega in advance and released the Radeon Vega Frontier Edition accelerator in July. Today we can finally examine AMD's consumer gaming product based on the new silicon: the Radeon RX Vega 64.

AMD Vega architecture

NCU - Next-generation Compute Unit

The basic building block of the Graphics Core Next architecture is the Compute Unit, which in Vega is called the NCU (Next-generation Compute Unit). Since GCN was first implemented more than five years ago, AMD has made no major changes to the CU design. As in previous iterations, Vega's NCU contains 64 shader ALUs capable of 128 single-precision (FP32) operations per clock, and the amount of L1 cache and shared memory inside the NCU has not changed since GCN 1.0. However, whereas the CU in GCN 1.3 (the Polaris chips) received only optimizations that raise per-clock performance over the CU in GCN 1.2 (Tonga and Fiji), and the two versions even remained compatible at the ISA level, Vega's designers introduced many new instructions and data formats. As a result, fifth-generation GCN can be regarded as the most profound transformation of the Graphics Core Next shader microarchitecture to date.

Like Polaris, Vega can natively process half-precision floating-point numbers (FP16), and it additionally supports integer operations at 8-, 16- and 32-bit precision. Reduced-precision integer formats already have many applications: INT8, for example, is used to run pre-trained machine learning networks (inference), while INT32 is used to compute hashes in cryptographic algorithms (including cryptocurrency mining). The demand for half-precision floating-point operations is less obvious. FP16 is widely used for shaders in mobile graphics, but the unified shader model of desktop APIs settled on FP32 from the start. Even so, FP16 may eventually find its way into desktop graphics for tasks that do not need full precision to preserve image quality, such as normal vectors, lighting values and HDR.

Most importantly, Vega's NCU can pack reduced-precision operations together, multiplying throughput several times over: instead of the 128 FP32 operations per clock that a single NCU performs, it can execute 256 16-bit or 512 8-bit operations. The only other GPU with such capabilities today is NVIDIA's GP100. Thus, in addition to being a high-performance gaming architecture, Vega is a versatile solution for general-purpose computing in everything except double precision, since FP64 throughput is limited to 1/16 of the FP32 rate.
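The throughput figures above follow from simple arithmetic. The Python sketch below is a minimal illustration, assuming that each of the NCU's 64 ALUs retires one fused multiply-add (counted as two operations) per clock and that packed math fits two 16-bit or four 8-bit values into each 32-bit lane; the function and constant names are hypothetical.

```python
# Peak per-clock throughput of a Vega NCU, derived from the figures in the text.
ALUS_PER_NCU = 64   # shader ALUs per NCU
OPS_PER_FMA = 2     # a fused multiply-add counts as two operations
FP64_DIVISOR = 16   # FP64 runs at 1/16 of the FP32 rate

# Assumed packing: how many values of each width fit into one 32-bit lane.
PACK_FACTOR = {"fp32": 1, "16-bit": 2, "8-bit": 4}


def ops_per_clock_per_ncu(fmt: str) -> int:
    """Peak operations per clock for one NCU in the given data format."""
    fp32_rate = ALUS_PER_NCU * OPS_PER_FMA
    if fmt == "fp64":
        return fp32_rate // FP64_DIVISOR
    return fp32_rate * PACK_FACTOR[fmt]


def ops_per_clock_per_chip(fmt: str, ncus: int = 64) -> int:
    """Peak operations per clock for a chip with `ncus` active NCUs."""
    return ops_per_clock_per_ncu(fmt) * ncus


if __name__ == "__main__":
    for fmt in ("fp32", "16-bit", "8-bit", "fp64"):
        print(f"{fmt}: {ops_per_clock_per_ncu(fmt)} ops/clock per NCU, "
              f"{ops_per_clock_per_chip(fmt)} ops/clock for 64 NCUs")
```

For a fully enabled chip with 64 NCUs this gives 8192 FP32 operations per clock; multiplying by a clock frequency yields the peak GFLOPS figures found in specification tables.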

Tile rendering and support for Direct3D feature level 12_1

The next area where Vega takes big strides is pixel throughput. Following NVIDIA's lead, AMD has adopted tile-based rendering in Vega: a technique widely used in mobile graphics that reduces the number of accesses to data outside the GPU caches. Vega's Draw-Stream Binning Rasterizer (DSBR) works on a similar principle.

Classic tile-based rendering, as used in mobile GPUs, processes a frame in two passes. First, screen space is divided into tiles (regions typically 16 × 16 or 32 × 32 pixels in size), and an index is built of the polygons that project onto each tile. Then the entire rendering procedure, from processing the polygons to applying textures and executing shaders, is carried out tile by tile, and the results of all tiles are stitched into a single image. The advantage of this method is that all intermediate operations within a tile work on a single data array that fits entirely in the GPU cache, which reduces how often external RAM must be accessed.
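The binning pass described above can be sketched in a few lines. This simplified Python illustration is not the actual hardware algorithm of AMD or any mobile GPU vendor: it assumes square 32 × 32 tiles and assigns each triangle to every tile that its screen-space bounding box overlaps, a conservative test that may include tiles the triangle does not actually cover.

```python
import math
from collections import defaultdict


def bin_triangles(triangles, width, height, tile=32):
    """Build the per-tile polygon index for one frame.

    `triangles` is a list of three (x, y) screen-space vertices each.
    Returns a dict mapping tile coordinates (tx, ty) to triangle indices
    in submission order. Bounding-box overlap is used as a conservative test.
    """
    tiles_x = math.ceil(width / tile)
    tiles_y = math.ceil(height / tile)
    bins = defaultdict(list)
    for idx, tri in enumerate(triangles):
        xs = [v[0] for v in tri]
        ys = [v[1] for v in tri]
        # Convert the bounding box to tile coordinates, clamped to the screen.
        x0 = max(0, math.floor(min(xs)) // tile)
        x1 = min(tiles_x - 1, math.floor(max(xs)) // tile)
        y0 = max(0, math.floor(min(ys)) // tile)
        y1 = min(tiles_y - 1, math.floor(max(ys)) // tile)
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(idx)
    return bins


# The second pass (not shown) would render tile by tile using only bins[(tx, ty)].
bins = bin_triangles([((0, 0), (10, 0), (0, 10)),
                      ((20, 20), (50, 20), (20, 50))], width=64, height=64)
```

Here the small triangle lands only in tile (0, 0), while the larger one straddles all four tiles of the 64 × 64 screen, so its data would be read back four times during the second pass.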

However, the two-pass processing of scene geometry itself consumes RAM bandwidth, because the GPU must first write the list of polygons falling into each tile to external memory and then read it back as it renders tile after tile. Consequently, the effectiveness of tile-based rendering ultimately depends on whether the bandwidth saved on pixel fill outweighs the cost of the two-pass geometry binning. In mobile applications with simple geometry, tile-based rendering is justified, but modern desktop games are better served by standard immediate-mode rendering, in which polygons are rasterized one after another across the whole screen in a single pass.
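This trade-off can be expressed as a toy cost model. In the Python sketch below every number is hypothetical, and the model assumes that binning writes each primitive's data to external memory once and reads it back once for every tile the primitive overlaps. Real GPUs compress and cache this data, so the sketch only illustrates why dense geometry tips the balance.

```python
def binning_traffic(primitives: int, bytes_per_primitive: float,
                    avg_tiles_per_primitive: float) -> float:
    """DRAM bytes spent on binning: one write plus one read per overlapped tile."""
    return primitives * bytes_per_primitive * (1 + avg_tiles_per_primitive)


def tiling_pays_off(pixel_bytes_saved: float, primitives: int,
                    bytes_per_primitive: float = 64,
                    avg_tiles_per_primitive: float = 1.5) -> bool:
    """True if tiling saves more pixel traffic than the binning pass costs."""
    cost = binning_traffic(primitives, bytes_per_primitive, avg_tiles_per_primitive)
    return pixel_bytes_saved > cost


# Hypothetical frame in which tiling saves 20 MB of pixel traffic.
simple_scene = tiling_pays_off(20e6, primitives=10_000)     # 1.6 MB spent on binning
dense_scene = tiling_pays_off(20e6, primitives=2_000_000)   # 320 MB spent on binning
```

With simple, mobile-style geometry the binning cost is negligible, while a scene with millions of primitives spends far more bandwidth on binning than tiling saves.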

Tile rendering is implemented differently in Maxwell/Pascal and Vega chips. NVIDIA has no polygon-sorting stage, since geometry is transformed in a single pass. AMD, by contrast, does sort, but it reduces the clock cycles spent on sorting by dynamically choosing the tile size and the batch of primitives according to the complexity of the scene.

In addition, sorting primitives and grouping them into batches is the most effective way to keep pixel shaders from running on invisible pixels, i.e. pixels occluded by polygons closer to the screen plane. Vega places individual pixel samples in a queue that records their depth relative to the screen, and since this queue has a finite size, tile-based rendering helps keep its contents within that limit for each tile.
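The benefit of resolving visibility before shading can be illustrated with a per-tile sketch. This Python example is a generic deferred-shading model, not a description of Vega's actual queue: it assumes opaque geometry, a less-than depth test and a queue large enough to hold every fragment in the tile, and it shades only the nearest fragment at each pixel.

```python
def resolve_tile(fragments):
    """Keep only the nearest fragment per pixel before any shading happens.

    `fragments` is an iterable of (x, y, depth, primitive_id) tuples inside one
    tile, in submission order. Smaller depth means closer to the screen.
    Returns (visible, skipped): a dict mapping (x, y) to the primitive to shade,
    and the number of fragments whose pixel shader never has to run.
    """
    nearest = {}
    total = 0
    for x, y, depth, prim in fragments:
        total += 1
        best = nearest.get((x, y))
        if best is None or depth < best[0]:
            nearest[(x, y)] = (depth, prim)
    visible = {pixel: prim for pixel, (_, prim) in nearest.items()}
    return visible, total - len(visible)


visible, skipped = resolve_tile([
    (0, 0, 0.5, 1),  # later hidden by primitive 2
    (0, 0, 0.2, 2),  # nearest at pixel (0, 0)
    (1, 0, 0.9, 1),  # only fragment at pixel (1, 0)
    (0, 0, 0.7, 3),  # behind primitive 2
])
```

With immediate-mode rendering and early depth testing, the first fragment at pixel (0, 0) would already have been shaded before primitive 2 arrived; waiting until the whole tile is resolved avoids that wasted work.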

Tile rendering in Vega does not require special support from applications and is enabled at the driver level. According to AMD's internal testing, DSBR raises average frame rates in modern games by up to 10% and reduces memory bus bandwidth consumption by up to 33%, with no impact on GPU power consumption. In professional CAD applications, DSBR can as much as double the frame rate.

Vega supports the features of Direct3D feature level 12_1. In fact, it has the most complete feature set of any current GPU, including a number of optional capabilities.

Optimized front-end

Polaris chips do not suffer from serious bottlenecks in the early stages of rendering, but compared with the competing architecture AMD still had work to do in this area. Vega still has one geometry engine per Shader Engine (the largest structure in the GPU, integrating all stages of the rendering pipeline), but the designers found a way to raise the front-end throughput limit from four to 17 primitives per clock.

To achieve this, AMD introduced an alternative operating mode for the geometry engine in which some fixed-function stages are replaced by programmable "primitive shaders", much as the geometry pipeline in NVIDIA's Pascal chips is partly programmable. Besides executing more economically than the equivalent fixed-function stages, primitive shaders allow invisible primitives to be discarded earlier in the pipeline. In the future, they could also be used for tessellation and many other functions, including projecting a scene from several viewpoints and at different resolutions simultaneously. It is not yet clear, however, whether activating Vega's programmable geometry pipeline requires work on the application side or whether the driver takes care of it.
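One of the simplest tests a programmable geometry stage can apply early is discarding back-facing and zero-area triangles. The Python sketch below shows that generic technique only; AMD has not detailed which culling tests primitive shaders perform, and treating counter-clockwise triangles as front-facing is an assumption (with a y-down screen axis the sign convention flips).

```python
def signed_area2(a, b, c):
    """Twice the signed area of the 2D triangle abc; positive if counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def cull_triangles(triangles, eps=1e-12):
    """Drop back-facing (clockwise) and degenerate (zero-area) triangles
    so that they never reach rasterization."""
    return [t for t in triangles if signed_area2(*t) > eps]


kept = cull_triangles([
    ((0, 0), (1, 0), (0, 1)),  # counter-clockwise: kept
    ((0, 0), (0, 1), (1, 0)),  # clockwise (back-facing): culled
    ((0, 0), (1, 1), (2, 2)),  # collinear (zero area): culled
])
```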

Beyond optimizing the geometry pipeline itself, AMD has taken measures to keep the engines inside the GPU fully loaded. An additional block, the Intelligent Workload Distributor (IWD), balances the load across the geometry engines, schedules operations so as to minimize context switches, and groups multiple instances of small primitives into a single SIMD instruction.
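AMD has not published how the IWD balances work, so the following is only a generic illustration of load balancing across geometry engines: a greedy Python sketch that hands each batch of primitives to the currently least-loaded engine. The default of four engines matches Vega 10's four shader engines; the batch costs are hypothetical.

```python
import heapq


def distribute(batch_costs, engines=4):
    """Assign each batch to the least-loaded engine (ties go to the lower index).

    Returns a list with one list of batch indices per engine.
    """
    heap = [(0, e) for e in range(engines)]  # (accumulated cost, engine id)
    heapq.heapify(heap)
    assignment = [[] for _ in range(engines)]
    for i, cost in enumerate(batch_costs):
        load, engine = heapq.heappop(heap)
        assignment[engine].append(i)
        heapq.heappush(heap, (load + cost, engine))
    return assignment


# One expensive batch followed by four cheap ones, split over two engines.
plan = distribute([5, 1, 1, 1, 1], engines=2)
```

The expensive batch keeps one engine busy while the other absorbs all the cheap batches, so neither engine sits idle.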

High-Bandwidth Cache Controller

With Vega, AMD introduced an innovative memory organization in which the GPU operates much like a PC's central processor. In a conventional architecture, the GPU treats the contents of local RAM as a collection of structures holding data of various types: textures, vertex arrays, and so on. Since these structures can be large, moving them from system memory to local memory significantly slows rendering. Application developers therefore typically try to reserve as much local memory as possible and keep all the necessary data close to the GPU, although methods such as Tiled Resources can load data from system memory in small chunks (similar to id Software's MegaTexture technology).

AMD offers a unified address space mechanism long used in CPUs. The contents of local and remote memory, regardless of resource type, are divided into small pages that the rendering pipeline can request individually and that can be moved or copied closer to or further from the GPU. Local RAM thus acts as an additional cache level beyond the L2 cache.
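Treating local memory as a cache of pages can be modeled as a least-recently-used (LRU) page cache. The Python sketch below is a simplified illustration under stated assumptions: the 64 KB default page size, full associativity and LRU eviction are hypothetical choices, not AMD's documented HBCC policy.

```python
from collections import OrderedDict


class PageCache:
    """Local video memory modeled as a fully associative LRU cache of pages
    backed by a larger (system or remote) memory."""

    def __init__(self, capacity_pages: int, page_size: int = 64 * 1024):
        self.capacity = capacity_pages
        self.page_size = page_size  # hypothetical page size
        self.resident = OrderedDict()  # page number -> None, oldest first
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Touch a byte address; return True on a hit, False if the page
        had to be migrated into local memory."""
        page = address // self.page_size
        if page in self.resident:
            self.resident.move_to_end(page)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)  # evict least recently used page
        self.resident[page] = None
        return False


cache = PageCache(capacity_pages=2, page_size=4096)
results = [cache.access(addr) for addr in (0, 100, 4096, 8192, 0)]
# Page 0 is evicted by the access to page 2, so the final access misses again.
```

Only the pages actually touched are migrated, so a large texture need not reside in local memory in its entirety, which is the point of page-granular management.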

Besides saving local memory, HBCC makes it possible to manage the flash storage on Radeon Pro accelerators more efficiently and to address up to 512 TB of virtual space. This functionality is unnecessary for consumer devices but will be in demand in virtualized environments. It remains an open question whether paged memory access works at the driver level (the feature itself is enabled in Radeon Settings) or whether the application must manage resource movement itself.

AMD has also introduced additional virtualization features, giving guest operating systems (up to 16 sessions) access to the hardware video encoding and decoding units. Scheduling between the three engines (graphics/compute, video encoding and video decoding) is handled by a separate hardware unit.

Vega 10 GPU

The only Vega-family GPU AMD has released so far has the same execution unit configuration as the Fiji processor: 4096 shader ALUs, 256 texture mapping units and 64 ROPs. However, the transistor count has grown from 8.9 to 12.5 billion, while the 14 nm FinFET process has shrunk the die from 596 to 486 mm². Vega 10 is therefore 72% denser than Fiji, and it uses its area about 5% more efficiently than even Polaris.
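The density comparison is straightforward arithmetic on transistor count and die area. In this Python sketch the Fiji and Vega 10 figures come from the text and the Polaris 20 transistor count from the specification table; the Polaris 20 die area of 232 mm² is an outside figure added for the comparison.

```python
# (transistors in millions, die area in mm^2)
CHIPS = {
    "Fiji": (8900, 596),
    "Polaris 20": (5700, 232),  # die area not given in the text
    "Vega 10": (12500, 486),
}


def density(chip: str) -> float:
    """Million transistors per square millimeter."""
    transistors_m, area_mm2 = CHIPS[chip]
    return transistors_m / area_mm2


def density_gain(a: str, b: str) -> float:
    """Fractional density advantage of chip `a` over chip `b`."""
    return density(a) / density(b) - 1


vs_fiji = density_gain("Vega 10", "Fiji")            # about 0.72
vs_polaris = density_gain("Vega 10", "Polaris 20")   # about 0.05
```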

Part of the extra transistor budget that sets Vega 10 apart from Fiji goes to a doubled L2 cache (4 MB versus 2 MB in Fiji) serving the new rendering features described above, but the lion's share is spent on the additional pipeline stages that AMD had to implement throughout the GPU to ensure stable operation at higher clock speeds. The designers stressed, however, that extra stages were added only where the benefit of a higher frequency outweighs the increased latency. Elsewhere, other methods were used, including shortening internal interconnects or completely reworking certain functional blocks.

Vega's on-chip register files are built from SRAM originally designed for Ryzen processors, which according to AMD delivers 18% area savings, 43% lower power consumption and 8% lower latency compared with standard solutions.

ROPs in Vega are now clients of the L2 cache rather than of the memory controller. This should speed up engines that use deferred rendering, since the output of a rendering pass is written directly to L2 instead of RAM and is immediately available to the texture units for subsequent operations.

For on-chip communication between the GPU core and uncore components (memory controller, PCI Express interface, multimedia unit, etc.), Vega uses the Infinity Fabric interconnect, which is also used in Zen-based processors. This will make it easy for AMD to integrate the Vega core into next-generation APUs.

The video decoding unit in Vega has gained no new functions compared with Polaris. It still decodes H.264 and H.265 at resolutions up to 3840 × 2160 at 120 fps. AMD has, however, clarified the question of hardware VP9 support, which was first announced for Polaris but has not been enabled in the driver to this day: Vega uses a hybrid method that combines the resources of the dedicated unit, the shader ALUs and the CPU.

The encoding unit, on the other hand, can now encode H.264 video in 4K resolution at 60 fps, whereas Polaris was limited to 30 fps.

Vega again uses HBM memory, but since the second generation of the technology allows stacks of up to 8 GB, AMD was able to increase the amount of local GPU memory while simplifying the design thanks to fewer stacks and simpler wiring. The Vega 10 package combines the GPU die with two 4 GB HBM2 stacks on a 2048-bit bus; because HBM2 runs at almost twice the frequency of first-generation HBM, the processor retains raw memory bandwidth comparable to Fiji's.
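Peak memory bandwidth is the bus width times the per-pin data rate, divided by eight bits per byte. A minimal Python sketch using the bus widths and per-pin rates from the specification table (1000 Mbit/s for Fiji's HBM, 1890 Mbit/s for Vega 10's HBM2); the function name is hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, mbits_per_pin: int) -> float:
    """Peak memory bandwidth in GB/s (decimal): width x per-pin rate / 8."""
    return bus_width_bits * mbits_per_pin / 8 / 1000


fiji = peak_bandwidth_gbs(4096, 1000)    # 512.0 GB/s: wide bus, slower HBM
vega10 = peak_bandwidth_gbs(2048, 1890)  # 483.84 GB/s: half the width, ~1.9x the rate
```

Halving the bus width is almost fully offset by the higher per-pin rate, which is why the two chips end up within about 6% of each other.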

Technical characteristics, delivery set, price

AMD has announced three accelerators based on Vega 10, not counting the Radeon Vega Frontier Edition. The top model in the family is the Radeon RX Vega 64, available in air-cooled and liquid-cooled versions. The number in the name refers to the 64 active NCUs of the fully unlocked chip. Since the Radeon R9 Fury X has the same configuration, RX Vega's advantage has to come from higher clock speeds as well as pipeline optimizations.

The air-cooled Radeon RX Vega 64 has a base GPU clock of 1247 MHz, which looks unimpressive next to the base clock of the GeForce GTX 1080 Ti (built on a GPU of comparable size with 500 million fewer transistors). However, the boost clocks of the two cards are quite comparable: 1546 and 1582 MHz, respectively. Moreover, with Vega AMD has given the boost clock a different meaning. Instead of the maximum frequency the GPU is allowed to reach, the figure now denotes the frequency the core can be guaranteed to reach in games, while the actual limit lies higher still. AMD and NVIDIA thus now use similar metrics, which makes it easier to compare cards by their specifications, although NVIDIA's boost clock still denotes a nominal average rather than the peak value observed in games.

At its stated frequencies, the Radeon RX Vega 64 Liquid Cooled Edition is already a close match for the GeForce GTX 1080 Ti, but consider the power consumption of the new products: while the air-cooled Radeon RX Vega 64 already exceeds the 250 W typical of top consumer graphics cards, the Liquid Cooled Edition reaches 345 W, an extreme figure for a single-GPU accelerator.

Given the scale of the optimizations in Vega 10, the size of the chip and its power draw, one would expect the Radeon RX Vega 64 to perform at or above the level of the GeForce GTX 1080 Ti, and in theoretical FP32 throughput it does lead the competitor's top accelerator. Judging by the prices, however, AMD is not so confident in its flagship's potential. The air-cooled version will go on sale at a suggested price of $499, the same as the GeForce GTX 1080, while the Radeon RX Vega 64 Liquid Cooled Edition carries a recommended price of $699, matching the current price of the GeForce GTX 1080 Ti.

| Manufacturer | AMD | |||||

| Model | Radeon R9 Fury X | Radeon RX 580 | Radeon Vega Frontier Edition | Radeon RX Vega 56 | Radeon RX Vega 64 | Radeon RX Vega 64 Liquid Cooled Edition |

| GPU | ||||||

| Name | Fiji XT | Polaris 20 XTX | Vega 10 XT | Vega 10 XL | Vega 10 XT | Vega 10 XT |

| Microarchitecture | GCN 1.2 | GCN 1.3 | GCN 1.4 | GCN 1.4 | GCN 1.4 | GCN 1.4 |

| Technical process, nm | 28 nm | 14 nm FinFET | 14 nm FinFET | 14 nm FinFET | 14 nm FinFET | 14 nm FinFET |

| Number of transistors, million | 8900 | 5700 | 12500 | 12500 | 12500 | 12500 |

| Clock frequency, MHz: Base Clock / Boost Clock | —/1050 | 1257/1340 | 1382/1600 | 1156/1471 | 1247/1546 | 1406/1677 |

| Number of shader ALUs | 4096 | 2304 | 4096 | 3584 | 4096 | 4096 |

| Number of texture mapping units | 256 | 144 | 256 | 256 | 256 | 256 |

| ROP number | 64 | 32 | 64 | 64 | 64 | 64 |

| RAM | ||||||

| Bus width, bits | 4096 | 256 | 2048 | 2048 | 2048 | 2048 |

| Chip type | HBM | GDDR5 SDRAM | HBM2 | HBM2 | HBM2 | HBM2 |

| Clock frequency, MHz (data rate per pin, Mbit/s) | 500 (1000) | 2000 (8000) | 945 (1890) | 800 (1600) | 945 (1890) | 945 (1890) |

| Capacity, MB | 4096 | 4096/8192 | 16384 | 8192 | 8192 | 8192 |

| I/O bus | PCI Express 3.0 x16 | PCI Express 3.0 x16 | PCI Express 3.0 x16 | PCI Express 3.0 x16 | PCI Express 3.0 x16 | PCI Express 3.0 x16 |

| Performance | ||||||

| Peak FP32 performance, GFLOPS (at maximum specified frequency) | 8602 | 6175 | 13107 | 10544 | 12665 | 13738 |

| FP64/FP32 performance ratio | 1/16 | 1/16 | 1/16 | 1/16 | 1/16 | 1/16 |

| RAM bandwidth, GB/s | 512 | 256 | 484 | 410 | 484 | 484 |

| Image output | ||||||

| Image output interfaces | HDMI 1.4a, DisplayPort 1.2 | HDMI 2.0, DisplayPort 1.3/1.4 | HDMI 2.0, DisplayPort 1.4 | HDMI 2.0, DisplayPort 1.4 | HDMI 2.0, DisplayPort 1.4 | HDMI 2.0, DisplayPort 1.4 |

| TDP, W | 275 | 185 | <300 | 210 | 295 | 345 |

| Retail price (USA, excluding tax, recommended at release), $ | 649 | 199/229 | 999/1499 | 399 | 499 | 699 |

| Retail price (Russia, recommended at release), rub. | N/A | 13,449 / 15,299 | N/A | N/A | N/A | N/A |
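The peak FP32 row of the table can be reproduced from the ALU counts and maximum specified clocks. A short Python check, assuming (as is standard for GCN) that each shader ALU retires one fused multiply-add, i.e. two floating-point operations, per clock:

```python
# (shader ALUs, maximum specified clock in MHz), taken from the table.
CARDS = {
    "Radeon R9 Fury X": (4096, 1050),
    "Radeon RX 580": (2304, 1340),
    "Radeon Vega Frontier Edition": (4096, 1600),
    "Radeon RX Vega 56": (3584, 1471),
    "Radeon RX Vega 64": (4096, 1546),
    "Radeon RX Vega 64 Liquid Cooled Edition": (4096, 1677),
}

# Peak FP32 values as listed in the table, in GFLOPS.
TABLE_GFLOPS = {
    "Radeon R9 Fury X": 8602,
    "Radeon RX 580": 6175,
    "Radeon Vega Frontier Edition": 13107,
    "Radeon RX Vega 56": 10544,
    "Radeon RX Vega 64": 12665,
    "Radeon RX Vega 64 Liquid Cooled Edition": 13738,
}


def peak_fp32_gflops(alus: int, clock_mhz: int) -> float:
    """Peak FP32 throughput in GFLOPS: ALUs x 2 FLOPs (FMA) x clock."""
    return alus * 2 * clock_mhz / 1000


for name, (alus, mhz) in CARDS.items():
    computed = round(peak_fp32_gflops(alus, mhz))
    print(f"{name}: {computed} GFLOPS (table: {TABLE_GFLOPS[name]})")
```

Every computed value matches the table after rounding, and dividing by 16 gives the corresponding FP64 peak.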

Design and features of the video card

Externally, nothing has changed: the card is an exact copy of the AMD Radeon RX VEGA 56 STRIX OC.

The only differences are in the sticker on the rear backplate: the number in the name has changed from 56 to 64, a different serial number is printed, and the sticker is oriented from right to left, bottom to top.

The card is more than two slots thick, which is hardly surprising. It uses the same two eight-pin connectors for auxiliary power.

The I/O bracket has small perforations that help exhaust heated air from the case. It carries two HDMI 2.0b outputs, two DisplayPort 1.4 outputs and one Dual-Link DVI connector.

Test bench and testing methodology

| Test bench configuration | |

| CPU | Intel Core i7-5960X @ 4 GHz (100 MHz × 40), constant frequency |

| Motherboard | ASUS RAMPAGE V EXTREME |

| RAM | Corsair Vengeance LPX, 2133 MHz, 4 × 4 GB |

| Storage | Intel SSD 520 240 GB + Crucial M550 512 GB |

| Power supply | Corsair AX1200i, 1200 W |

| CPU cooling system | Thermalright Archon |

| Case | CoolerMaster Test Bench V1.0 |

| Monitor | NEC EA244UHD |

| Operating system | Windows 10 Pro x64 |

| Software for AMD GPUs | |

| All video cards | Radeon R9 Fury X, Radeon RX 580: Radeon Software Crimson ReLive Edition 17.6.2; Radeon RX Vega 64: 17.30.1051-Beta6a (Tessellation: Use application settings) |

| NVIDIA GPU software | |

| All video cards | GeForce Game Ready Driver 384.94 |

| Benchmarks: synthetic | |||

| Test | API | Resolution | Full-screen anti-aliasing |

| 3DMark Fire Strike | DirectX 11 (feature level 11_0) | 1920 × 1080 | Off |

| 3DMark Fire Strike Extreme | DirectX 11 (feature level 11_0) | 2560 × 1440 | Off |

| 3DMark Fire Strike Ultra | DirectX 11 (feature level 11_0) | 3840 × 2160 | Off |

| 3DMark Time Spy | DirectX 12 (feature level 11_0) | 2560 × 1440 | Off |

| Benchmarks: games | ||||

| Game (in order of release date) | API | Settings | Full screen anti-aliasing | |

| 1920 × 1080 / 2560 × 1440 | 3840 × 2160 | |||

| Crysis 3 + FRAPS | DirectX 11 | Max. quality. Start of the Swamp mission | MSAA 4x | Off |

| Metro: Last Light Redux, built-in benchmark | Max. quality | SSAA 4x | ||

| GTA V, built-in benchmark | Max. quality | MSAA 4x + FXAA + Reflection MSAA 4x | ||

| DiRT Rally, built-in benchmark | Max. quality | MSAA 4x | ||

| Rise of the Tomb Raider, built-in benchmark | DirectX 12 | Max. quality, VXAO off | SSAA 4x | |

| Tom Clancy's The Division, built-in benchmark | Max. Quality, HFTS off | SMAA 1x Ultra + TAA: Supersampling | TAA: Stabilization | |

| Ashes of the Singularity, built-in benchmark | Max. quality | MSAA 4x + TAA 4x | Off | |

| DOOM | Vulkan | Max. quality. Foundry Mission | TSSAA 8TX | |

| Total War: WARHAMMER built-in benchmark | DirectX 12 | Max. quality | MSAA 4x | |

| Deus Ex: Mankind Divided, built-in benchmark | Max. quality | MSAA 4x | ||

| Battlefield 1 + OCAT | Max. quality. Start of the Over the Top mission | TAA | ||

| Benchmarks: video decoding, computing | |

| Program | Settings |

| DXVA Checker, Decode Benchmark, H.264 | Files 1920 × 1080p (High Profile, L4.1), 3840 × 2160p (High Profile, L5.1). Microsoft H264 Video Decoder |

| DXVA Checker, Decode Benchmark, H.265 | Files 1920 × 1080p (Main Profile, L4.0), 3840 × 2160p (Main Profile, L5.0). Microsoft H265 Video Decoder |

| LuxMark 3.1 x64 | Hotel Lobby Scene (Complex Benchmark) |

| Sony Vegas Pro 13 | Sony benchmark for Vegas Pro 11, duration - 65 s, rendering in XDCAM EX, 1920 × 1080p 24 Hz |

| SiSoftware Sandra 2016 SP1, GPGPU Scientific Analysis | OpenCL, FP32/FP64 |

| CompuBench CL Desktop Edition X64, Ocean Surface Simulation | — |

| CompuBench CL Desktop Edition X64, Particle Simulation 64K | — |

Testing Radeon RX Vega 64. Comparison with GeForce GTX 1080 in DirectX 11 and DirectX 12

After numerous announcements and presentations, AMD's new top-end graphics cards have finally reached the market. We have already covered the architectural and technological features of the Radeon RX Vega; now it is time for hands-on gaming tests. As a reminder, the senior model, the Radeon RX Vega 64, comes in two versions: air-cooled and liquid-cooled. The liquid-cooled card also runs at higher frequencies, offering the highest performance available from the Vega 10 GPU. In this review we look at the regular Radeon RX Vega 64 with standard cooling and clock speeds. We will examine the card's thermal behavior, test its overclocking potential and compare it with its main competitor, the GeForce GTX 1080.

AMD Radeon RX Vega 64

Radeon RX Vega 64 review units come in small black boxes.

Inside there is only a video card without any additional accessories.

The Radeon RX Vega 64 looks very familiar, resembling the reference Radeon RX 480 cards: the same black shroud with imitation perforation and a large logo on the side. But its substantial weight immediately signals a top-tier product.

The card is less than 27 cm long. The reference version carries no obvious markings with the model name.

The entire back side is covered by a backplate, with an additional cross-shaped plate above the graphics chip. A row of LEDs in the corner (GPU Tach) serves as a load indicator, and adjacent switches let you change the color of the glow.

On the side there is a large illuminated Radeon logo. In the same area, on the edge of the board, there is a small BIOS switch. The card has two firmware versions that differ in power limits, which affects performance and operating temperatures. We will see the real difference in the test results.

The Radeon RX Vega 64 has three full-size DisplayPort connectors and one HDMI port. As on the GeForce GTX 1080 Ti, DVI has been dropped, which leaves more room for vent openings and improves hot-air exhaust.

Even the ordinary air-cooled Radeon RX Vega 64 has an impressive declared TDP of 295 W, so efficient cooling requires a capable cooling system. Outwardly it is a familiar blower-type cooler. Its metal base serves as a heatsink for all the important components on the board, with numerous thermal pads on the black base contacting the transistors and chokes of the power circuitry. The GPU die shares its package with the HBM2 memory stacks, and a copper pad contacts them.

The metal base has a dedicated seat for the main heatsink, with a cutout for the copper pad. The GPU heatsink is not especially large, but by visual assessment it is larger than the one on top GeForce cards.

On the side there is a large radial fan with a 75 mm impeller. A dedicated wall forms an isolated chamber that, together with the plastic shroud, creates a directed air channel housing the heatsink.

The heatsink consists of a stack of thin fins on a large copper vapor chamber, a typical design for blower-type coolers. The protruding copper pad contacts the surface of the graphics chip.

Placing the graphics chip and HBM memory in a single package once made it possible to keep the Radeon R9 Fury X board very small. The new Radeon RX Vega accelerators use a larger board. Many electronic components are placed on the back of the PCB, and many SMD components sit on the GPU package itself. External power is supplied through two 8-pin connectors.

The GPU is powered by a 12-phase power delivery system (six channels with phase doubling, driven by an IR35217 controller).

Like the Radeon R9 Fury before them, Radeon RX Vega graphics cards are the only products that combine the GPU and memory on a single substrate. In this case there are two HBM2 memory stacks with a total capacity of 8 GB. This single package of three dies measures almost 3 cm along its side.

Radeon RX Vega 64 Performance and Overclocking

AMD has slightly changed how it specifies clock speeds: the base clock and the average in-game boost clock are now listed. For the Radeon RX Vega 64 these are 1247 and 1546 MHz, respectively. The maximum possible frequency reaches 1630 MHz, which is the value GPU-Z displays. The HBM2 memory runs at 945 MHz, for an effective DDR data rate of 1890 MHz.

As a reminder, there is also the Radeon RX Vega 64 Liquid Cooled, with liquid cooling and higher clocks: a base clock of 1406 MHz and a boost clock of 1677 MHz.

Now let's look at temperatures and frequencies under gaming load. All tests were run on an open bench at a room temperature of 23–24 °C. Under these conditions, actual GPU frequencies stayed at or below about 1500 MHz: in the Superposition benchmark (Extreme mode) the core mostly ran at 1490–1510 MHz, and in Tom Clancy's The Division (2560 × 1440, Ultra) at 1450–1500 MHz, as illustrated below.

Frequencies are governed by the power and temperature limits, so heavier load or stronger heating can lower them. In particular, switching to 4K lowers the average frequency in Tom Clancy's The Division slightly, by 10–20 MHz.

Maximum core temperatures under gaming load are 79–80 °C, with fan speeds of up to 2400 rpm. These are quite good figures, given that the reference GeForce GTX 1080 has a temperature limit of about 82 °C. And thanks to the variable fan speed, the actual noise level can be rated as moderate.

In the software settings you can switch to a power-saving or a Turbo mode. These profiles have different power limits, which affects the resulting frequencies. There is also a second BIOS with lower power limits, so it provides more economical operating modes. Let's see how the card behaves with this BIOS.

In Tom Clancy's The Division at 2560 × 1440, the average frequency drops to about 1430–1450 MHz, and at 3840 × 2160 to 1380–1410 MHz.

At the same time, the temperature drops to 73 °C, although actual peak temperatures may be higher. For clarity, let's compare the card on the two BIOSes in a continuous run of the Superposition benchmark with Extreme settings. The lower-left screenshot shows the readings after a 13-minute test on the main BIOS; the right screenshot shows operation on the second BIOS with more economical parameters.

Notably, the drop in core boost frequencies is small, while the peak temperature falls from 80 °C to 76 °C. Fan speed also decreases slightly, although the maximum stays at 2400 rpm. Below we will compare the card's performance in these two modes to see the real difference. For now, we can conclude that the Radeon RX Vega 64 does not run as hot as one might expect. Note, however, that the chip has an additional Hot Spot thermal sensor whose readings are available in GPU-Z, and under heavy load the peak temperature at that point can exceed the main reading by several degrees.

For clarity, we also provide a table of official power limits for the different modes and firmware versions; it also includes data for the top-end Liquid Cooled version.

The difference between the two BIOS versions is about 20 W. Judging by the data, the listed figures are average values for gaming loads (recall the stated maximum of 295 W).

Temperature and noise are governed by parameters that can be adjusted manually. All the fine-tuning options are in the AMD Radeon Settings application under Gaming > Global Settings > Global WattMan.

At the top of the tab there is a general slider that limits power and thereby affects Boost frequencies, lowering or raising performance. The default setting corresponds to the balanced mode; there are also a power-saving mode and a Turbo mode. The latter is not translated in the Russian version of the software and is labeled simply “Режим” (“Mode”). This tab also has a monitoring panel for tracking all the main parameters in real time without third-party software.

At the bottom of the panel there is a section for temperature settings, control of the power limit and fan speed. The screenshot shows its values with standard settings.

In the settings, the target fan speed is 2400 rpm, and under load the card runs at exactly this value. The target temperature is 75 °C, but with the faster primary BIOS it is not maintained. The maximum temperature is 85 °C. These targets are key: the card balances between them, trying not to exceed the specified limits. In the case of the Radeon RX Vega 64, however, the main factor determining clocks and performance is the power limit. If the standard modes do not suit you, you can fine-tune the card using all these parameters, but manual adjustments must be made with great care.

Overclocking calls for even greater caution. Global WattMan offers ample control over the GPU and HBM2 memory clocks and their voltages; by default the voltages are managed automatically, but manual control is also possible. However, any overclock requires raising the power limit, which, together with the higher clocks, sharply increases heat output. In our experiments, even a slight increase in core clock reduced stability: the card could pass the test in one game and then freeze the system in another, and everything depended on how long the card had been running and how quickly the GPU heated up. At one point it seemed we would have to give up on overclocking altogether and simply raise the power limit.

The solution turned out to be limiting the voltages. When GPU voltage control is unlocked for the two top core power states, the default voltages are 1.15 and 1.2 V; we limited them to 1.14 and 1.175 V. The effect was immediate: we managed to more or less stabilize a +2.5% core overclock combined with a memory overclock to 1050 (2100) MHz. All this was accompanied by raising the power limit to the maximum, with the fan running at maximum speed under load.
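Why capping the voltage helped can be illustrated with the common first-order approximation for CMOS dynamic power, P ∝ V²·f. The sketch below is only a rough estimate under that assumption: it ignores leakage current (which also rises with voltage and temperature) and the card's actual power-management logic, and the helper name `relative_dynamic_power` is hypothetical.

```python
def relative_dynamic_power(v_new: float, v_ref: float, freq_ratio: float = 1.0) -> float:
    """Estimate dynamic power relative to a reference point, assuming P ~ V^2 * f.

    Leakage and temperature effects are ignored, so this shows a trend only.
    """
    return (v_new / v_ref) ** 2 * freq_ratio


# Voltages from the text: stock 1.15 V / 1.2 V, capped at 1.14 V / 1.175 V,
# combined with the +2.5 % core clock offset.
lower_state = relative_dynamic_power(1.14, 1.15, 1.025)
top_state = relative_dynamic_power(1.175, 1.2, 1.025)

print(f"lower state: {lower_state:.3f}x stock dynamic power")
print(f"top state:   {top_state:.3f}x stock dynamic power")
```

Under this approximation the top state draws slightly less dynamic power than at stock even with the clock offset (about 0.983x), which fits the observation that the capped configuration was easier to keep stable within the power and thermal limits.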

With these settings, the card was working at the limit of what the stock cooler can handle. We could complete the tests only by pausing between benchmarks. For example, the card could not get through seven back-to-back repetitions of the Tom Clancy's The Division test, but when they were split into two batches with a pause in between, there were no problems. Of course, such an overclock is not suitable for everyday use, but we wanted to see the maximum capabilities of the Radeon RX Vega 64. The overclocked results also give a rough idea of what the Radeon RX Vega 64 Liquid Cooled can do.

With these settings, the peak clock rose to 1672 MHz, and the actual Boost clock stayed at 1620–1630 MHz in almost all tests. So the final clock gain is not 2.5% but 8–10% relative to the stock clocks.
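The 8–10% figure is measured against the clocks the card actually sustained at stock, not against the 2.5% offset itself. A quick back-calculation from the numbers in the text shows which stock sustained clock that implies; this is arithmetic on the stated figures, not an additional measurement, and `implied_stock_clock` is a hypothetical helper.

```python
def implied_stock_clock(oc_clock_mhz: float, gain: float) -> float:
    """Stock clock implied by an overclocked clock and a relative gain (0.08 = 8 %)."""
    return oc_clock_mhz / (1.0 + gain)


SPEC_BOOST_MHZ = 1546  # official boost clock of the Radeon RX Vega 64

# Sustained overclocked boost from the text (1620-1630 MHz) and the stated 8-10 % gain.
# Pairing the extremes gives the widest plausible range.
lowest = implied_stock_clock(1620, 0.10)
highest = implied_stock_clock(1630, 0.08)

print(f"implied stock sustained boost: {lowest:.0f}-{highest:.0f} MHz")
print(f"below the {SPEC_BOOST_MHZ} MHz spec boost: {highest < SPEC_BOOST_MHZ}")
```

The implied stock range (roughly 1473–1509 MHz) sits below the 1546 MHz specification boost, which is consistent with the power limit, rather than the clock offset, governing sustained clocks on this card.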

As a result, we have three working configurations: standard settings (Balanced) on each of the two BIOS versions, plus the overclock. Let's compare the Radeon RX Vega 64 in these modes with the reference GeForce GTX 1080, tested both at stock and overclocked.

Characteristics of tested video cards

The table lists the official specifications of the cards. The core clock row gives the full range from base to maximum boost. Actual Boost clocks fall within this range, and how they behave depends on the card's settings in each mode, in particular on the power limit.

| Video adapter | Radeon RX Vega 64 | GeForce GTX 1080 |
|---|---|---|
| Core | Vega 10 | GP104 |
| Number of transistors, million | 12500 | 7200 |
| Process technology, nm | 14 | 16 |
| Die area, mm² | 486 | 314 |
| Number of stream processors | 4096 | 2560 |
| Number of texture units | 256 | 160 |
| Number of render output units | 64 | 64 |
| Core clock (base–boost), MHz | 1247–1546 | 1607–1733 |
| Memory bus width, bit | 2048 | 256 |
| Memory type | HBM2 | GDDR5X |
| Memory clock (effective), MHz | 1890 | 10010 |
| Memory capacity, GB | 8 | 8 |
| DirectX feature level | 12_1 | 12_1 |
| Interface | PCI-E 3.0 | PCI-E 3.0 |
| Power, W | 295 | 180 |
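The specification table is enough to derive two theoretical peak figures. The sketch assumes that the listed memory clocks are effective data rates and that each stream processor performs one fused multiply-add (two FLOPs) per clock at the maximum boost clock; these are paper maximums and do not predict frame rates.

```python
# Specification figures copied from the table above.
# Memory clocks are treated as effective data rates (transfers per second, in MHz).
CARDS = {
    "Radeon RX Vega 64": {"bus_bits": 2048, "mem_mhz": 1890, "shaders": 4096, "boost_mhz": 1546},
    "GeForce GTX 1080": {"bus_bits": 256, "mem_mhz": 10010, "shaders": 2560, "boost_mhz": 1733},
}


def bandwidth_gb_s(bus_bits: int, mem_mhz: float) -> float:
    """Peak memory bandwidth: bus width in bytes times effective transfer rate, in GB/s."""
    return bus_bits / 8 * mem_mhz * 1e6 / 1e9


def fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 rate, counting one fused multiply-add (2 FLOPs) per shader per clock."""
    return shaders * 2 * clock_mhz * 1e6 / 1e12


for name, spec in CARDS.items():
    bw = bandwidth_gb_s(spec["bus_bits"], spec["mem_mhz"])
    tf = fp32_tflops(spec["shaders"], spec["boost_mhz"])
    print(f"{name}: {bw:.1f} GB/s, {tf:.2f} TFLOPS FP32")
```

This gives about 483.8 GB/s and 12.66 TFLOPS for the Radeon RX Vega 64 versus 320.3 GB/s and 8.87 TFLOPS for the GeForce GTX 1080: the wide 2048-bit HBM2 bus compensates for its much lower clock.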

Test bench

The test bench configuration is as follows:

- processor: Intel Core i7-6950X (3.0@4.1 GHz);

- cooler: Noctua NH-D15 (two NF-A15 PWM fans, 140 mm, 1300 rpm);

- motherboard: MSI X99S MPower (Intel X99);

- memory: G.Skill F4-3200C14Q-32GTZ (4x8 GB, DDR4-3200, CL14-14-14-35);

- system disk: Intel SSD 520 Series 240GB (240 GB, SATA 6Gb/s);

- additional drive: Hitachi HDS721010CLA332 (1 TB, SATA 3Gb/s, 7200 rpm);

- power supply: Seasonic SS-750KM (750 W);

- monitor: ASUS PB278Q (2560x1440, 27″);

- operating system: Windows 10 Pro x64;

- AMD Crimson Edition driver 17.9.1;

- NVIDIA GeForce 385.41 driver.

Testing was carried out at a resolution of 2560x1440 with maximum graphics settings. Games are listed alphabetically; specialized benchmarks come at the end of the list.

Testing methodology

Battlefield 4

Testing was carried out in the first mission, after the wall is blown up. The same route was repeated: through a small area of dense vegetation, then down onto a large construction site. Frame rate was measured with Fraps.

All graphics settings are on Ultra, MSAA multisampling in 4x mode.

Battlefield 1

A new test scene has been selected. We usually use the gameplay sequence at the start of the Cape Helles mission, but it creates an extreme load. So we chose a simpler scene, though on a large map with complex terrain: the beginning of the second mission of the "Avanti Savoia!" story campaign. The same sequence of actions, with shooting and grenade throws, was repeated each time. The test episode lasts 52 seconds, with at least seven repetitions.

Ultra quality was selected. Testing was carried out in DirectX 11 using Fraps and in DirectX 12 using the Mirillis Action! utility.

Deus Ex: Mankind Divided

For testing, a built-in benchmark was used, which was run at least seven times.

The standard Ultra-quality profile is selected. Testing was carried out in two modes: when rendering in DirectX 11 and DirectX 12.

Fallout 4

Testing was carried out with Fraps immediately after leaving the Vault at the start of the game: a short walk around the surrounding area, with plenty of vegetation and abundant light shafts. Scenes like this cause the most noticeable drops in performance. The procedure is shown below.

The maximum graphics quality profile is selected, with HBAO+ ambient occlusion additionally enabled. The frame rate cap is removed.

For Honor

For testing, the built-in gaming benchmark was used, which was launched at least seven times on each video card. To correctly take into account the minimum fps, Fraps was additionally used.

The standard maximum-quality Very High profile was selected, which uses TAA anti-aliasing.

Gears of War 4

We used the built-in benchmark, which was run 6–7 times.

The maximum graphics quality is Ultra, and additional DirectX 12 functions are activated (Async Compute, etc.).

Grand Theft Auto 5

The built-in benchmark was used for testing, with five repetitions. For an overall assessment, the average fps was calculated across all test scenes. The minimum fps was measured with Fraps over a full benchmark run.

All basic graphics settings are at maximum, and MSAA 4x anti-aliasing is enabled. In the advanced settings, the detail loading range (Extended Distance Scaling) and shadow distance (Extended Shadows Distance) are raised by +100% over the base level.

Mass Effect: Andromeda

A short run was made during the very first landing on a planet. Frame rate was measured with Fraps.

Ultra quality presets are selected, grain and chromatic aberration effects are active.

Prey (2017)

Performance was measured with Fraps while replaying a fixed sequence of actions early in the game, where the hero has to break a pane of glass. Seven repetitions.

The profile for the maximum graphics quality, Very High, is selected.

The Witcher 3: Wild Hunt

Testing was carried out with Fraps, measuring fps during a ride along the road to the village of White Orchard.

Maximum graphics settings are enabled, all post-processing effects and HBAO+ ambient occlusion are active, and HairWorks is enabled.

Tom Clancy's Ghost Recon: Wildlands

For testing, a built-in gaming benchmark was used, which was run seven times.

Maximum Ultra quality presets are selected.

Tom Clancy's The Division

The built-in performance test was run at least seven times for each mode.

The maximum quality profile is selected. In addition, parameters that this profile does not set to maximum (reflection quality, HBAO+ ambient occlusion, detail) were raised to the maximum. Testing was carried out in two modes: DirectX 11 and DirectX 12.

Warhammer 40000: Dawn of War III

New game in our tests. It has a built-in benchmark, which is used to compare performance. Additionally, the Fraps utility was used.

Maximum graphics quality is selected, all effects are enabled.

Superposition Benchmark

A new benchmark from Unigine. It was run 3–4 times at Extreme settings at 1920x1080.

3DMark Fire Strike

Fire Strike test from the latest 3DMark benchmark suite. Testing was carried out in Extreme (2560×1440) and Ultra (3840×2160) modes.

3DMark Time Spy

The new benchmark for DirectX 12 was launched with default settings.

Energy consumption

Measurements are presented for seven applications:

- Deus Ex: Mankind Divided DX12;

- Gears of War 4;

- Grand Theft Auto 5;

- Tom Clancy's Ghost Recon: Wildlands;

- Tom Clancy's The Division DX12;

- Warhammer 40,000: Dawn of War III;

- Superposition Benchmark.

The peak value of each run was recorded; these peaks were averaged per application across the runs at both resolutions, and then the overall average was calculated. The data was collected with a Cost Control 3000 power meter.
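The averaging described above can be sketched as follows. The text does not say whether each resolution was averaged separately before combining; pooling all runs gives the same result when every resolution has the same number of runs, which this sketch assumes. The function names and the readings are placeholders, not measured data.

```python
from statistics import mean


def app_average(peaks_by_resolution: dict[str, list[float]]) -> float:
    """Average of the per-run peak readings for one application, pooled over resolutions."""
    pooled = [peak for peaks in peaks_by_resolution.values() for peak in peaks]
    return mean(pooled)


def overall_average(per_app: dict[str, dict[str, list[float]]]) -> float:
    """Mean of the per-application averages."""
    return mean(app_average(runs) for runs in per_app.values())


# Placeholder readings in watts, invented only to exercise the functions.
example = {
    "app A": {"res 1": [400.0, 410.0], "res 2": [420.0, 430.0]},
    "app B": {"res 1": [300.0, 310.0], "res 2": [320.0, 330.0]},
}
print(overall_average(example))
```

Each application carries equal weight in the final figure regardless of how many runs it had, which keeps one long test from dominating the overall average.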

Test results

In the final performance charts, all Radeon RX Vega 64 results are grouped together: the bottom bar corresponds to the second BIOS, the middle one to the main BIOS and the top one to the overclock.

Battlefield 4

Let's start studying the results with the old Battlefield 4. The game often shows better results on NVIDIA video adapters, and the Radeon RX Vega 64 fits into this picture, losing to the GeForce GTX 1080. The gap with the competitor is large, but the overall performance is still high. Switching to an economical BIOS or overclocking has little impact on the Radeon's results.

Battlefield 1

First, let's take a look at the results in DirectX 11.

In Battlefield 1, the Radeon RX Vega 64 on the economical BIOS and the reference GeForce GTX 1080 deliver identical results. On the main BIOS, the card under review is about 2% faster, and overclocking adds another 6–8%. The overclocked GeForce is slightly faster still, but the difference is minimal.

Switching to DirectX 12 changes the picture. The Radeon RX Vega 64 delivers almost identical results under both APIs; the new API even shows a slight rise in average fps, though the difference is negligible. The GeForce GTX 1080 only loses performance under DirectX 12, and even overclocked it is slower than the Radeon RX Vega 64 in its economical configuration.

Deus Ex: Mankind Divided

Let's compare the performance in Mankind Divided with different versions of DirectX.

Here the transition from DirectX 11 to DirectX 12 gives the Radeon RX Vega 64 a 3–5% speedup, while the GeForce GTX 1080 loses about 1%. In both modes, the Radeon RX Vega 64 in its economical configuration is faster than the stock GeForce GTX 1080. Under DirectX 12, the AMD flagship at stock settings matches the overclocked GeForce GTX 1080.

Fallout 4

The competitors' average performance is roughly the same, but the Radeon shows pronounced frame rate dips. As a result, the Radeon RX Vega 64 loses to its competitor in Fallout 4 on minimum fps.

For Honor

Sharp performance swings are also noticeable in the standard For Honor benchmark, so we had to resort to Fraps to record the average minimum fps on the Radeon. On the Vega 64 this figure barely changes when switching BIOS or overclocking. Overall, the stock GeForce GTX 1080 is faster in every respect. The AMD flagship reaches a similar average fps only when overclocked; overclocking itself gives a 6% speedup.

Gears of War 4

The Radeon RX Vega 64 and GeForce GTX 1080 deliver similar performance in Gears of War 4. Only on the second, economical BIOS does the new card clearly lose to its competitor; the difference between the two firmware versions is up to 6%. Overclocking gives a 7–9% speedup.

Grand Theft Auto 5

In GTA 5, the new Radeon is seriously behind its competitor. Neither overclocking nor any other setting change has much effect on the performance of the Radeon RX Vega 64.

Mass Effect: Andromeda

The Radeon RX Vega 64 trails the GeForce GTX 1080 by about 5% in Andromeda. The economical mode costs 1–2% of performance, and overclocking adds more than 9%.

Prey (2017)

In the new shooter Prey, AMD's flagship again loses to its NVIDIA competitor, by 7–10% depending on the active BIOS. Overclocking improves results by 9%.

The Witcher 3: Wild Hunt

The Radeon RX Vega 64 does well in The Witcher 3, outperforming the GeForce GTX 1080 by 3–5%, and it keeps a slight advantage when both cards are overclocked. Overclocking gives a speedup of about 9%, while switching to the second BIOS reduces performance by more than 4%.

Tom Clancy's Ghost Recon: Wildlands

In Ghost Recon: Wildlands, the AMD flagship is almost as fast as the GeForce GTX 1080; the difference shows mainly in minimum fps. When overclocked, the competitor's advantage is more obvious: the Radeon RX Vega 64 scales less well, gaining 6% from overclocking.

Tom Clancy's The Division

Another game that supports both DirectX 11 and DirectX 12, and we tested it under both APIs.

The results show a complete triumph for the Radeon RX Vega 64 in The Division. Under DirectX 11 its advantage over the GeForce GTX 1080 is 5–7%, and under DirectX 12 the gap widens to 9–20%. Switching to the new API gives the NVIDIA card only a minimal gain, while Vega gains 5–15%, with the largest increase in minimum fps.

Warhammer 40000: Dawn of War III

Another confident victory for the Radeon RX Vega 64, which reaches a level of performance its competitor cannot match. Even in economy mode on the second BIOS, the newcomer is faster than the overclocked GeForce GTX 1080. Overall performance is high for both cards, so owners of either will be able to play comfortably at 2560x1440.

Superposition Benchmark

A new benchmark based on the Unigine engine. Of the available APIs, the faster DirectX 11 was chosen, with the standard Full HD Extreme settings profile. The results are presented both as a total score and in fps.

Superposition shows equality between the Radeon RX Vega 64 in a more economical configuration and the reference version of the GeForce GTX 1080. At the initial factory settings, the Radeon is slightly faster. A tiny advantage remains during overclocking.

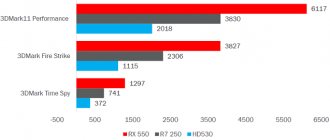

3DMark Fire Strike

In both test modes, the Radeon RX Vega 64 is faster than the GeForce GTX 1080. The average advantage is about 6%, if we consider the Radeon with the main BIOS.

3DMark Time Spy

The situation is slightly different in this benchmark: on the second BIOS the AMD newcomer is slower than its competitor, but on the default BIOS it is 1% faster.

Gameplay comparison

Let's supplement the given data and conclusions with a comparison of Radeon RX Vega 64 and GeForce GTX 1080 in video format.

The video directly compares identical gameplay episodes in a resolution of 2560x1440, which will allow you to compare the dynamics between opponents. The video cards operated in nominal modes.

Energy consumption

The difference in power consumption is significant. The Radeon RX Vega 64 draws an extremely large amount of power with either BIOS version, and the increase when overclocked is also striking. Moreover, the chart shows an average across several applications; in some games the readings exceeded 600 W.

Conclusions

The Radeon RX Vega 64 is AMD's most advanced graphics accelerator and the successor to the Radeon R9 Fury X. It is the only gaming graphics card with HBM2 memory. It also brings a number of technological innovations, including a new memory architecture that can work with system RAM, using the onboard 8 GB as a cache. Read more about this and other technological features in a separate article.

In gaming performance, the Radeon RX Vega 64 is positioned as a competitor to the GeForce GTX 1080 and clearly cannot compete with the GeForce GTX 1080 Ti. In our comparative testing, the Radeon RX Vega 64 beats the GeForce GTX 1080 in 7 of 16 test applications: decisively in Deus Ex: Mankind Divided, Warhammer 40000: Dawn of War III, The Division and 3DMark Fire Strike, modestly in The Witcher 3: Wild Hunt and Time Spy, and in Battlefield 1 under DirectX 12. The GeForce GTX 1080 is stronger overall in nine applications, although sometimes only marginally. The Radeon RX Vega 64 does better in most DirectX 12 games, consistently gaining performance when switching to this mode, whereas the GeForce GTX 1080 gains little or even loses performance when moving from DirectX 11 to DirectX 12 in the same games. Battlefield 1 is especially telling here: the Radeon RX Vega 64 is the first card in our tests that did not lose performance in this game under DirectX 12.

The disadvantages of the Radeon RX Vega 64 include extremely high power consumption. It is not just high compared to NVIDIA's top cards; it is a record for single-GPU graphics cards as a whole. This raised concerns about heat and noise, but here the standard Vega 64 pleasantly surprised us: its temperature and noise levels match those of other top-end cards. Of course, a lot depends on good airflow inside the case. With normal ventilation, you will be able to game on the Radeon RX Vega 64 at the standard fan speed of 2400 rpm.

Despite the obvious drawbacks of high power consumption and heat output, AMD's engineers have balanced the final product well. It is good to see so many ways to choose a more economical mode: software power profiles and a second BIOS with a reduced power limit. If you need to reduce the card's temperature, switching to the second BIOS is the best solution: it provides a real reduction in heat at a modest performance cost of 2–5%.

The AMD Radeon Settings software suite offers extensive customization options, letting experienced users find their optimal settings, all within the standard AMD Radeon software. Instead of scaling the parameters down, you can overclock the card, but with the regular air-cooled version this is extremely risky, and we do not recommend overclocking it. The card already operates at the limit of its capabilities, and any overclock has a critical impact on heat and stability. If you cap the maximum voltage and raise the power limit, you can get some speedup, but this is interesting for experimentation rather than practical use.

Our overclocking results give a rough idea of the performance of the Radeon RX Vega 64 Liquid Cooled: the top-end version should perform close to, or slightly below, our overclocked card. Such a card looks even more attractive against the GeForce GTX 1080, and liquid cooling neutralizes the noise and heat problem. Unfortunately, it will not fix the high power consumption. But the main problem remains the inflated price. Once prices normalize, the new Radeon RX Vega has a chance to take a strong position in the market.

This review is the first in a series of articles on Radeon RX Vega. An expanded version of testing will be released soon, with even more test applications and modes. Stay with us!

Performance: 3DMark

| 3DMark (Graphics Score) | Resolution | AMD Radeon RX Vega 64 (1546/1890 MHz, 8 GB) | AMD Radeon RX 580 (1340/8000 MHz, 8 GB) | AMD Radeon R9 Fury X (1050/1000 MHz, 4 GB) | NVIDIA GeForce GTX TITAN X (1000/7012 MHz, 12 GB) | NVIDIA GeForce GTX 1080 (1607/10008 MHz, 8 GB) | NVIDIA GeForce GTX 1080 Ti (1480/11010 MHz, 11 GB) |
|---|---|---|---|---|---|---|---|
| Fire Strike | 1920 × 1080 | 22 503 | 13 631 | 16 105 | 17 115 | 21 694 | 27 877 |
| Fire Strike Extreme | 2560 × 1440 | 10 711 | 6 090 | 7 559 | 7 928 | 10 264 | 13 681 |
| Fire Strike Ultra | 3840 × 2160 | 5 400 | 3 051 | 3 821 | 4 042 | 5 001 | 6 728 |
| Time Spy | 2560 × 1440 | 7 079 | 4 238 | 5 192 | 5 106 | 7 111 | 9 525 |
| Max. | | | −39% | −27% | −24% | +0% | +35% |
| Avg. | | | −42% | −28% | −26% | −4% | +28% |
| Min. | | | −44% | −29% | −28% | −7% | +24% |

Max., Avg. and Min. rows: difference relative to the AMD Radeon RX Vega 64.
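The Max., Avg. and Min. rows in the 3DMark table appear to be the largest, arithmetic-mean and smallest per-test differences relative to the Radeon RX Vega 64. The sketch below reproduces the GeForce GTX 1080 and GTX 1080 Ti rows under that assumption (arithmetic mean of percentage differences, rounded to whole percent); the method is inferred from the numbers, not stated in the source, and `summary_rows` is a hypothetical helper.

```python
from statistics import mean


def summary_rows(reference: list[float], other: list[float]) -> tuple[int, int, int]:
    """Max, mean and min of per-test differences of `other` versus `reference`, in whole %."""
    if len(reference) != len(other):
        raise ValueError("both cards need a score for every test")
    diffs = [(o / r - 1.0) * 100.0 for r, o in zip(reference, other)]
    return round(max(diffs)), round(mean(diffs)), round(min(diffs))


# Graphics scores from the 3DMark table: Fire Strike, Extreme, Ultra, Time Spy
vega64 = [22503, 10711, 5400, 7079]
gtx1080 = [21694, 10264, 5001, 7111]
gtx1080ti = [27877, 13681, 6728, 9525]

print(summary_rows(vega64, gtx1080))    # (0, -4, -7)
print(summary_rows(vega64, gtx1080ti))  # (35, 28, 24)
```

Because the mean is arithmetic, a single outlier can shift a card's summary noticeably; for example, the Fury X result of 2 fps in The Division at 3840 × 2160 is what produces that card's −94% minimum in the 4K game table.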

AMD Radeon RX Vega 64 - theory

At launch, AMD is introducing its new top-tier GPU series in three variants. We tested the RX Vega 64 with classic air cooling. The cost of the card is around 35,000 rubles.

In addition, a liquid-cooled version overclocked by approximately 10 percent will be available. The RX Vega 56, almost 7,000 rubles cheaper and with cut-down specifications, completes the launch lineup of the Vega series.

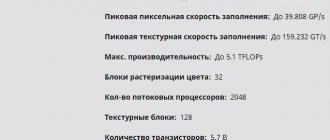

The sample we tested came straight from AMD and is a so-called reference design, on which partners such as Sapphire, Gigabyte or Asus can base their own cards. The GPU, which AMD internally calls Vega 10 and which is based on the GCN 5 architecture, contains 4096 shader units grouped into 64 CUs (Compute Units).

It also has 256 texture units (TMUs) and 64 render output units (ROPs). The card comes with 8 GB of very fast HBM2 memory, connected to the graphics chip via a 2048-bit interface.

The clock speeds are in line with the card's positioning. The base clock is 1247 MHz, and in Boost mode the card raises it as needed to a maximum of 1546 MHz, without any manual overclocking.

The water-cooled RX Vega 64 variant is designed for even higher clocks. The so-called Typical Board Power, which describes typical power consumption while gaming, is 295 W. For comparison, the TDP (maximum power consumption) of the Nvidia GeForce GTX 1080 is 180 W.

We have already put the air-cooled AMD Radeon RX Vega 64 reference model through our benchmark course. However, during testing, the motherboard we normally use for this failed, and we had to find a replacement quickly.

Because of this, the results are not 100% comparable with our other results, so we decided not to include the new AMD card in our ranking for now. But since the replacement motherboard is very close in specifications to the original one, in this article we still present a comparison with the GTX 1080.

Radeon RX Vega 64: top specification at launch

Of course, we will repeat the tests with our standard motherboard as soon as possible, and after that we will be sure to rank the new AMD graphics card accordingly.

Performance: games (1920 × 1080, 2560 × 1440)

| 1920 × 1080 | Full-screen anti-aliasing | AMD Radeon RX Vega 64 (1546/1890 MHz, 8 GB) | AMD Radeon RX 580 (1340/8000 MHz, 8 GB) | AMD Radeon R9 Fury X (1050/1000 MHz, 4 GB) | NVIDIA GeForce GTX TITAN X (1000/7012 MHz, 12 GB) | NVIDIA GeForce GTX 1080 (1607/10008 MHz, 8 GB) | NVIDIA GeForce GTX 1080 Ti (1480/11010 MHz, 11 GB) |
|---|---|---|---|---|---|---|---|
| Ashes of the Singularity | MSAA 4x + TAA 4x | 37 | 24 | 32 | 31 | 45 | 58 |
| Battlefield 1 | TAA | 131 | 82 | 91 | 85 | 115 | 141 |
| Crysis 3 | MSAA 4x | 65 | 44 | 61 | 66 | 79 | 115 |
| Deus Ex: Mankind Divided | MSAA 4x | 38 | 25 | 33 | 30 | 38 | 54 |
| DiRT Rally | MSAA 4x | 85 | 57 | 65 | 84 | 101 | 129 |
| DOOM | TSSAA 8TX | 200 | 138 | 166 | 151 | 200 | 200 |
| GTA V | MSAA 4x + FXAA + Reflection MSAA 4x | 64 | 45 | 55 | 67 | 84 | 93 |
| Metro: Last Light Redux | SSAA 4x | 87 | 51 | 69 | 74 | 92 | 124 |
| Rise of the Tomb Raider | SSAA 4x | 57 | 35 | 42 | 47 | 63 | 86 |
| Tom Clancy's The Division | SMAA 1x Ultra + TAA: Supersampling | 81 | 51 | 61 | 54 | 82 | 113 |
| Total War: WARHAMMER | MSAA 4x | 71 | 39 | 54 | 59 | 71 | 85 |
| Max. | | | −30% | −6% | +4% | +30% | +77% |
| Avg. | | | −36% | −19% | −16% | +9% | +39% |
| Min. | | | −45% | −31% | −35% | −12% | +0% |

| 2560 × 1440 | Full-screen anti-aliasing | AMD Radeon RX Vega 64 (1546/1890 MHz, 8 GB) | AMD Radeon RX 580 (1340/8000 MHz, 8 GB) | AMD Radeon R9 Fury X (1050/1000 MHz, 4 GB) | NVIDIA GeForce GTX TITAN X (1000/7012 MHz, 12 GB) | NVIDIA GeForce GTX 1080 (1607/10008 MHz, 8 GB) | NVIDIA GeForce GTX 1080 Ti (1480/11010 MHz, 11 GB) |
|---|---|---|---|---|---|---|---|
| Ashes of the Singularity | MSAA 4x + TAA 4x | 28 | 19 | 26 | 25 | 34 | 47 |
| Battlefield 1 | TAA | 97 | 59 | 62 | 62 | 82 | 102 |
| Crysis 3 | MSAA 4x | 41 | 27 | 39 | 41 | 53 | 73 |
| Deus Ex: Mankind Divided | MSAA 4x | 24 | 16 | 19 | 19 | 25 | 35 |
| DiRT Rally | MSAA 4x | 64 | 41 | 49 | 61 | 73 | 96 |
| DOOM | TSSAA 8TX | 147 | 89 | 110 | 100 | 136 | 178 |
| GTA V | MSAA 4x + FXAA + Reflection MSAA 4x | 48 | 31 | 39 | 48 | 63 | 81 |
| Metro: Last Light Redux | SSAA 4x | 50 | 28 | 41 | 44 | 52 | 73 |
| Rise of the Tomb Raider | SSAA 4x | 36 | 22 | 27 | 29 | 38 | 53 |
| Tom Clancy's The Division | SMAA 1x Ultra + TAA: Supersampling | 59 | 36 | 44 | 39 | 56 | 80 |
| Total War: WARHAMMER | MSAA 4x | 47 | 26 | 38 | 40 | 49 | 64 |
| Max. | | | −32% | −5% | +1% | +32% | +78% |
| Avg. | | | −38% | −20% | −17% | +8% | +46% |
| Min. | | | −45% | −36% | −36% | −15% | +5% |

Performance: games (3840 × 2160)

| 3840 × 2160 | Full-screen anti-aliasing | AMD Radeon RX Vega 64 (1546/1890 MHz, 8 GB) | AMD Radeon RX 580 (1340/8000 MHz, 8 GB) | AMD Radeon R9 Fury X (1050/1000 MHz, 4 GB) | NVIDIA GeForce GTX TITAN X (1000/7012 MHz, 12 GB) | NVIDIA GeForce GTX 1080 (1607/10008 MHz, 8 GB) | NVIDIA GeForce GTX 1080 Ti (1480/11010 MHz, 11 GB) |
|---|---|---|---|---|---|---|---|
| Ashes of the Singularity | Off | 45 | 28 | 37 | 31 | 44 | 59 |
| Battlefield 1 | Off | 55 | 34 | 41 | 37 | 50 | 67 |
| Crysis 3 | Off | 32 | 20 | 28 | 31 | 36 | 50 |
| Deus Ex: Mankind Divided | Off | 28 | 17 | 15 | 21 | 28 | 38 |
| DiRT Rally | Off | 43 | 27 | 33 | 41 | 50 | 66 |
| DOOM | Off | 75 | 45 | 59 | 54 | 75 | 97 |
| GTA V | Off | 47 | 29 | 37 | 41 | 52 | 71 |
| Metro: Last Light Redux | Off | 43 | 26 | 37 | 39 | 47 | 65 |
| Rise of the Tomb Raider | Off | 42 | 26 | 30 | 35 | 44 | 62 |
| Tom Clancy's The Division | TAA: Stabilization | 34 | 21 | 2 | 23 | 32 | 46 |
| Total War: WARHAMMER | Off | 39 | 24 | 32 | 31 | 39 | 52 |
| Max. | | | −37% | −13% | −3% | +16% | +56% |
| Avg. | | | −38% | −29% | −20% | +3% | +41% |
| Min. | | | −40% | −94% | −33% | −9% | +22% |

AMD Radeon RX Vega 64 - practice

As you'd expect, the RX Vega 64 delivers strong performance. For comparison, we took the MSI GeForce GTX 1080, which in the Founders Edition version is closest to the reference design.

In synthetic benchmarks, the two cards are almost on par. In 3DMark Cloud Gate, the AMD card scores 34967 points (GTX 1080: 34265 points), and in Fire Strike Ultra it scores 5267 points (GTX 1080: 5029 points). In short, the Radeon is slightly ahead of the GeForce here.

In games the picture is similar: depending on the benchmark, one card or the other has the edge. In GTA V, the Vega 64 runs at 101.70 fps in Full HD and 46.49 fps in 4K, while the GeForce does much better: 142.1 fps (Full HD) and 55.4 fps (4K).

The opposite is true in Metro: Last Light: the Radeon delivers as much as 150.46 fps (Full HD) and 57.12 fps (4K), while the GTX 1080 is 5–10% slower. So the Radeon RX Vega 64 and GeForce GTX 1080 are roughly on par in performance.

The difference becomes more noticeable only when a game is optimized for a particular GPU vendor. Interestingly, in the LuxMark OpenCL benchmark the Radeon scores 5275 points, nearly double the GTX 1080's 2805 points.

Since many cryptocurrency mining utilities use the OpenCL programming interface, the RX Vega 64 may become an object of desire for miners, and because of this, the price of the new product may skyrocket.

Radeon RX Vega 64: extreme power consumption

So are GeForce and Radeon ultimately equal in the top segment? Unfortunately not: AMD's card has one major flaw that surprised our testers, reopening a dark chapter in the GPU maker's history that we thought had closed with Polaris.

Under full load, our test system with the Vega card consumed, depending on the operating scenario, from 100 to 200 W more than with the GeForce GTX 1080. It is completely incomprehensible to us why AMD released such a “glutton” that does not compensate for this disadvantage with any special advantages.

Nor should we forget that the GeForce GTX 1080 has been on the market for a whole year, so merely matching its performance is no great achievement for a new product. And since Nvidia also has the GeForce GTX 1080 Ti in its lineup, the graphics card crown stays with the "greens".

Performance: Computing

Clock frequencies, power consumption, temperature

The updated WattMan utility for the Radeon RX Vega offers three preset power profiles that lower or raise power consumption relative to the nominal 100%. We ran all performance tests of the Radeon RX Vega 64 in Turbo mode, which raises the GPU's power limit by 15%. Under these conditions, the highest Vega 10 clock we observed in games was 1662 MHz, above the 1546 MHz boost clock in the specifications. In most applications, however, the sustained clocks are much lower: in Crysis 3, which we use to measure power consumption, the GPU clock hovers around 1478 MHz.

Printed circuit board

Disassembling the card shows no differences from the ASUS Radeon RX VEGA 56 STRIX OC. Rather than repeat ourselves and state the obvious, let's look at the main differences between the card from our previous review and today's.

These differences become apparent once the massive cooling system is removed.

In our review of the ASUS Radeon RX VEGA 56 STRIX OC, we came across a GPU package assembled in Korea, with SK hynix memory. Today's card has a package of Taiwanese origin, uses HBM2 made by Samsung, and has its package filled with a compound.

The compound changes both the package's appearance and its heat dissipation.

The GPU die sits flush with the HBM2 stacks, which gives better contact with the base of the cooler.

Conclusions

Thanks to the tremendous work AMD's engineers put into the Vega architecture, the company has returned to the high-performance discrete graphics market. In many respects, including die size, clock speeds and power consumption, the Vega 10 GPU looks like a legitimate rival to NVIDIA's GP102, and in general-purpose computing tests it fully lived up to that expectation. In games, however, AMD's new flagship can only compete with the GeForce GTX 1080. Moreover, despite all the differences between the Vega and Polaris architectures, the Radeon RX Vega 64 stands in the same relation to the GTX 1080 as the Radeon RX 480 once did to the GeForce GTX 1060. At ultra-high resolution (4K) the two cards can be considered on par, but in less demanding modes Vega's position is less secure. The rivals trade blows in tests that favor one architecture or the other, yet our selection of AAA games includes several titles in which Vega performs so inefficiently that the scales tip in favor of the GeForce GTX 1080.

P.S. Because of the extremely short deadline for this review (we received the Radeon RX Vega 64 just 24 hours before publication), we had to run an abbreviated test suite and, in particular, did not cover overclocking. We promise to catch up in the near future.

For AMD fans: Sapphire Radeon RX 580 Pulse 8GB

The Radeon RX 580 is an excellent mid-range graphics card for gamers who want to play at Full-HD or QHD resolutions.

Unfortunately, Ethereum miners also caught wind of its strong compute performance and swept the market clean.

At launch, the RX 580 cost about 18,000 rubles, but now you will have to pay 25,000 to 28,000 rubles for it. We hope these sky-high prices come down again soon, as the RX 580 is an excellent option for budget-conscious gamers.