The excitement around NVIDIA's first GeForce GTX 1080, built on the new 16-nm GP104 graphics processor of the Pascal architecture, has not yet subsided, and the current leader in 3D graphics is already releasing its next model, the GeForce GTX 1070.

It is based on the same GP104 chip, albeit in a somewhat cut-down configuration, and has slightly lower GPU clock speeds and regular GDDR5 memory. Thanks to its lower price and predictably high overclocking potential, the GeForce GTX 1070 could become the most popular video card among gamers. However, first things first.

Printed circuit board

The printed circuit board follows a classic layout: the memory chips are soldered around the GPU, and the power subsystem occupies the right side. Everything, including the memory chips and power components, is cooled by a solid metal plate on which the fan is mounted.

The new chip (GP104-400-A1) is very power-efficient and does not need elaborate cooling. The first thing that catches the eye is the absence of a metal frame around the GPU, which would protect the die from chipping. The GDDR5X memory consists of eight chips manufactured by Micron. The GPU power subsystem has five phases, with one more phase dedicated to the memory. The transistors are NTMFD4C85N parts from ON Semiconductor.

The back of the board is devoid of any significant elements. All memory chips are soldered on the front side.

However, a closer look reveals the uP9511P PWM controller from uPI Semiconductor, which regulates the power delivery.

Results of FPS measurements in games

The card was tested at 3840×2160 with maximum graphics settings.

| Game | Frames per second |
|---|---|
| Assassin's Creed Syndicate | 63 |
| Battlefield 1 | 66 |
| Dishonored 2 | 58 |
| Far Cry Primal | 51 |
| GTA V | 75 |
| Crysis 3 | 70 |
| Tom Clancy's The Division | 57 |

Game tests of the GTX 1080 Ti show that the card delivers high frame rates in modern games at maximum image settings: every title in the table runs above 50 FPS in 4K.

What's new?

According to NVIDIA, the new architecture is revolutionary, but is it really? The most striking changes in Pascal are the move to a new 16-nm process technology and the use of a new type of video memory, GDDR5X. Together they allowed a significant increase in GPU clock speed and memory bandwidth, which in turn produced a noticeable increase in performance. Another important feature is the use of FinFET (fin field-effect) transistors, which reduce power consumption and improve efficiency. Even so, Pascal is more an evolution of Maxwell than something fundamentally new.

SLI also received notable changes. NVIDIA will now focus on two-card configurations and try to optimize performance scaling specifically for that scenario. This does not mean that three or even four video cards can no longer be used; the emphasis is simply shifting toward the most popular option. After all, only a few users can afford a system with three or four video cards.

For fans of multi-monitor configurations and virtual reality headsets, NVIDIA has prepared Simultaneous Multi-Projection (SMP). In essence, this optimization processes geometry for several predefined projections simultaneously, in a single pass. What is it for? First, to eliminate distortion when using curved monitors or multi-display configurations. Second, to improve performance in these scenarios. Few of us had the opportunity to try NVIDIA 3D Vision back in its day, but those who did noticed a clear lack of performance, since the video card had to render the image twice, once for each eye. SMP eliminates this problem.

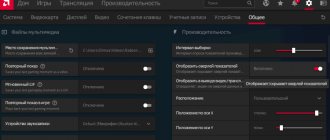

Two more interesting innovations also deserve mention: Ansel and Fast Sync.

Ansel is an invaluable tool for creating game-related content, useful for streamers and authors of gaming blogs. During gameplay you can pause and move the camera to any angle to take a screenshot. You also get a number of effects to enhance the image, as well as the ability to capture shots at very high resolution. In addition, you can create 3D images, panoramic screenshots and 360° images. Note, however, that this only works in games that add support for it.

Fast Sync is a complement to VSync. Enabling it eliminates the input lag that occurs when VSync is turned on, while also removing the tearing artifacts that appear when vertical synchronization is turned off. However, this only works when the frame rate exceeds the display's refresh rate.
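The frame-selection idea behind Fast Sync can be illustrated with a small simulation. This is a simplified model built on stated assumptions, not NVIDIA's driver logic: the GPU is assumed to render without waiting for the display, frame k is assumed to complete at exactly k / render_fps seconds, and at each refresh the display scans out the newest fully completed frame while older undisplayed frames are discarded. The function name `fast_sync_schedule` is hypothetical.

```python
from fractions import Fraction


def fast_sync_schedule(render_fps: int, refresh_hz: int, refreshes: int) -> list[tuple[int, int, float]]:
    """Return (refresh index, frame id shown, frame age in ms) for each refresh.

    Simplified model (assumption): the GPU renders unthrottled, frame k
    completes at k / render_fps seconds, and every refresh scans out the
    newest fully completed frame. The GPU never blocks, and the display
    never shows a half-written buffer, so there is no tearing.
    """
    frame_time = Fraction(1, render_fps)    # exact fractions avoid float rounding
    refresh_time = Fraction(1, refresh_hz)
    schedule = []
    for r in range(1, refreshes + 1):
        t = r * refresh_time
        newest = t // frame_time            # index of the last frame finished by time t
        age = t - newest * frame_time       # how stale that frame is at scan-out
        schedule.append((r, newest, float(age * 1000)))
    return schedule


if __name__ == "__main__":
    for r, frame, age_ms in fast_sync_schedule(render_fps=150, refresh_hz=60, refreshes=4):
        print(f"refresh {r}: frame {frame} (age {age_ms:.2f} ms)")
```

With 150 FPS on a 60 Hz display, the model shows frames 2, 5, 7 and 10 at the first four refreshes and drops the frames in between. If render_fps were below refresh_hz, the same frame would be shown on consecutive refreshes, which is why the technique only pays off when the frame rate exceeds the refresh rate.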

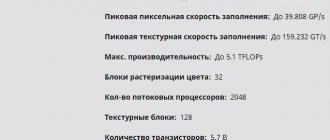

Specifications

Like the TITAN X, the GTX 1080 Ti is based on the GP102 GPU, so their characteristics are similar, but the new card's memory capacity has been reduced from 12 to 11 GB. The reason is the narrower 352-bit memory bus instead of 384-bit. The small difference in memory capacity is offset by a higher memory frequency. The main characteristics of the GeForce GTX 1080 Ti are shown in the table below.

| Parameter | Value |
|---|---|
| Process technology, nm | 16 |
| Number of transistors, billion | 12 |
| Memory frequency (effective), MHz | 11010 |
| Core frequency (base), MHz | 1480 |
| Video memory type | GDDR5X |
| Video memory capacity, GB | 11 |
| Memory bus, bit | 352 |
| Power consumption, W | 250 |
| Maximum temperature, °C | 91 |
| Memory bandwidth, GB/s | 484 |
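The bandwidth and capacity figures in the table follow from simple arithmetic. The sketch below assumes an effective data rate of 11 Gbit/s per pin (about 11000 MHz effective) and, for the capacity, one 8-Gbit (1 GB) GDDR5X chip per 32-bit memory channel; the helper names are illustrative.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin data rate (Gbit/s) times bus width, divided by 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


def memory_capacity_gb(bus_width_bits: int, chip_width_bits: int = 32, chip_capacity_gb: int = 1) -> int:
    """Capacity assuming one chip of chip_capacity_gb on each chip_width_bits-wide channel."""
    channels = bus_width_bits // chip_width_bits
    return channels * chip_capacity_gb


if __name__ == "__main__":
    print(memory_bandwidth_gbs(11.0, 352))  # 484.0 (GTX 1080 Ti)
    print(memory_capacity_gb(352))          # 11: eleven chips on a 352-bit bus
    print(memory_capacity_gb(384))          # 12: twelve chips on a 384-bit bus
```

Dropping one 32-bit channel removes one memory chip, which accounts for the reduction from 12 GB to 11 GB described above.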

The impressive characteristics of the new card are reflected in its price. Immediately after release, it cost about $800. But the sharp rise in the popularity of cryptocurrency and the profitability of mining significantly increased demand for the card, and the price rose accordingly. It now sells for about $1,100.

How many watts does the 3070 need?

The declared power consumption of the video card is 220 W, which leads to an official recommendation of a 650 W power supply.

The RTX 3070 Founders Edition received a proprietary 12-pin power connector. The card comes with the necessary 12-pin to 8-pin adapter cable.


Overclocking

When overclocking, it is worth raising the power limit first. The card allows the Power Limit to be increased to 120%. The GPU base clock was raised to 1642 MHz.

After overclocking, the GPU ran at 1923–1974 MHz under real gaming load. Monitoring utilities recorded a peak of 2075 MHz, but only as isolated spikes.

In practice, the GP102 runs at close to 2000 MHz, but the dynamic boost mechanism does not let it stay consistently above this psychological threshold. As the GPU clock rises, both power consumption and chip temperature increase, and the boost algorithm tries to keep the GPU temperature within set limits. After overclocking, the chip heats up to 85 °C, with the fan spinning above 3000 rpm.

Although the base GPU clock has formally been raised by 10.9%, the actual operating frequencies do not rise by the same amount: GPU Boost has to manage the chip's temperature and power consumption, preventing it from clocking beyond safe limits.
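The trade-off described above, where GPU Boost balances clock speed against temperature and power, can be modeled as a simple feedback loop. This is a toy sketch, not NVIDIA's actual algorithm: the 13 MHz step size, the 84 °C temperature target and the linear power and thermal models are assumed values chosen for illustration. Only the 1642 MHz base clock, the 2075 MHz peak and the 300 W limit (250 W × 120%) are derived from the figures in this section.

```python
def boost_step(clock_mhz: int, temp_c: float, power_w: float, *,
               temp_target_c: float = 84.0,   # assumed temperature target
               power_limit_w: float = 300.0,  # 250 W x 120% Power Limit
               bin_mhz: int = 13,             # assumed size of one boost step
               min_clock_mhz: int = 1642,     # overclocked base clock
               max_clock_mhz: int = 2075) -> int:
    """Return the next clock: step down if over a limit, otherwise step up."""
    if temp_c > temp_target_c or power_w > power_limit_w:
        return max(min_clock_mhz, clock_mhz - bin_mhz)
    return min(max_clock_mhz, clock_mhz + bin_mhz)


def simulate(steps: int = 200) -> list[int]:
    """Run the loop against hypothetical linear power and first-order thermal models."""
    clock, temp = 1642, 40.0
    history = []
    for _ in range(steps):
        power = 0.14 * clock                  # toy model: power grows linearly with clock
        equilibrium = 25.0 + 0.21 * power     # toy model: steady-state temperature for this power
        temp += 0.1 * (equilibrium - temp)    # temperature drifts toward that equilibrium
        clock = boost_step(clock, temp, power)
        history.append(clock)
    return history


if __name__ == "__main__":
    clocks = simulate()
    print(min(clocks[-50:]), max(clocks[-50:]))
```

In this toy model the clock climbs until the temperature crosses the target and then oscillates around the clock at which the temperature settles at that target, which illustrates why a nominal base-clock increase does not translate one-to-one into a higher running clock.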

The memory could also be overclocked further: the chips ran stably at an effective 12112 MHz (+10%).
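The percentages quoted for the overclock can be checked with a one-line formula. The memory baseline of 11010 MHz effective is an assumption here: it corresponds to the stock 11 Gbit/s effective rate of the GTX 1080 Ti and is the value that makes 12112 MHz a 10% increase.

```python
def percent_increase(stock: float, overclocked: float) -> float:
    """Relative increase of an overclocked value over its stock value, in percent."""
    return (overclocked - stock) / stock * 100


if __name__ == "__main__":
    print(f"GPU base clock: +{percent_increase(1480, 1642):.1f}%")   # +10.9%
    print(f"Memory clock:   +{percent_increase(11010, 12112):.1f}%")  # +10.0%
```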

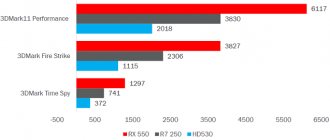

Positioning

Before looking at the video card, we need to define the profile of a potential buyer. Who is the GTX 1080 primarily aimed at? First of all, at those who are not willing to compromise and want to fully enjoy games on WQXGA or UHD monitors. The new card will also suit advanced streamers, enthusiasts, overclockers and other users who differ from the mass market and know exactly what they want. At the same time, given the current price of 55-60 thousand rubles, the circle of future owners narrows even further, and calling it a “people's card” is simply not appropriate. Alas, despite its impressive performance and welcome overclocking headroom, only a few in our country will be able to afford such a graphics accelerator.

Cooling system

The reference cooling system design has not changed significantly. It is still a blower-type cooler that exhausts hot air outside the case. This design makes sense in compact systems and is also suitable for builds with several graphics cards.

The main heatsink cools only the graphics chip and is based on a vapor chamber. The active part of the cooler is a radial (blower) fan with a number of optimizations to reduce noise.

Video cards with custom cooling systems often skimp on the backplate, which, combined with a non-standard PCB color, does not always look the way we would like, especially if you are trying to put together a neat build that follows a color scheme. With the Founders Edition, however, you do not have to worry about this. The backplate is split into two equal parts and does not really take part in cooling; here it serves as a stiffener.

Power supply for GeForce GTX 1070 and GTX 1070 Ti

To find out which power supply suits the NVIDIA GeForce GTX 1070 and GTX 1070 Ti, we need to look at the specifications on NVIDIA's website. According to the official specifications, these cards consume 150-180 W: 150 W for the GTX 1070 and 180 W for the GTX 1070 Ti.

NVIDIA's specifications also include a recommended power supply rating: for both versions of the GTX 1070 it is 500 W.

You can cross-check NVIDIA's recommendation with an online power supply calculator. Such calculators give a rough estimate of the required power based on the components used. Here we use MSI's calculator, specifying an Intel Core i9 9900KF processor, one GeForce GTX 1070 Ti, 2 hard drives, 4 RAM modules, 4 fans, 4 additional PCI Express cards and 1 DVD drive. With these components, the MSI calculator recommends a little over 450 W.

The MSI calculator's result is about 50 W below NVIDIA's recommendation, so for maximum reliability it is better to go with the higher figure.

We can therefore conclude that the NVIDIA GeForce GTX 1070 or 1070 Ti needs a 500 W power supply for normal operation. If you want to leave room for an upgrade or simply play it safe, choose a power supply with some margin, for example 100 W more. With that reserve, the PSU for the GTX 1070 and GTX 1070 Ti would be 600 W.
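The sizing rule used in this section (take the larger of the calculator estimate and NVIDIA's recommendation, then optionally add a reserve) can be written as a small helper. The function name, the default 100 W reserve and the rounding to 50 W steps are assumptions for illustration, and 455 W stands in for the MSI calculator's "a little over 450 W".

```python
import math


def recommend_psu_watts(calculator_estimate_w: float, vendor_recommendation_w: float,
                        reserve_w: float = 100, step_w: int = 50) -> int:
    """Suggest a PSU rating from a calculator estimate and a vendor recommendation."""
    base = max(calculator_estimate_w, vendor_recommendation_w)  # trust the larger figure
    target = base + reserve_w                                   # optional headroom for upgrades
    return math.ceil(target / step_w) * step_w                  # round up to an assumed 50 W size step


if __name__ == "__main__":
    print(recommend_psu_watts(455, 500))               # 600: with a 100 W reserve
    print(recommend_psu_watts(455, 500, reserve_w=0))  # 500: the bare recommendation
```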

Temperature and power consumption

As a rule, in our tests AMD GPUs run cooler than comparable NVIDIA GPUs when both use the reference cooling system. This time the situation is different. At idle, the GeForce GTX 580 GPU runs at the same temperature as the Radeon HD 5870 GPU; under gaming load, the GF110 is only 4 degrees hotter, which is a good result given that the slower GF100 reached 94 °C under similar conditions!

As mentioned above, to achieve this NVIDIA engineers not only used a new cooling system, which works on the same principle as the one on the Radeon HD 5970, but also substantially reworked the GPU at the transistor level. Throughout testing, the noise of the GeForce GTX 580 cooler did not stand out against the background noise of the other system components. In our view this is a very good result, especially given NVIDIA's past “merits” in GPU heating and the noise of its stock coolers. Let's see how things stand with the GTX 580's power appetite.

In terms of power consumption, the GeForce GTX 580 is also in good shape. The Radeon HD 5870 is still more economical, but the difference between the GTX 580 and HD 5870 is so small that it can be considered a draw.

Unlike the GeForce GTX 480, which is ahead of the Radeon HD 5870 but not by a convincing margin, the GeForce GTX 580 pulls away from its competitor quite noticeably. Moreover, even without overclocking, the GeForce GTX 580 comes dangerously close to AMD's flagship, the Radeon HD 5970.

As we have repeatedly noted, the GF100 architecture (and therefore GF110) is optimized for ultra-heavy modes. Without anti-aliasing, the Radeon HD 5970 leads all other accelerators by a wide margin, but once full-screen anti-aliasing is enabled, AMD's dual-chip flagship begins to lose ground: it is only slightly ahead of the non-overclocked GeForce GTX 580 at 1680×1050 and falls behind it at 1920×1200.

The racing game Colin McRae: DiRT 2 was one of the first games to support DirectX 11 and tessellation, although in this game tessellation is not always visible and is hardly noticeable during a race. Even so, despite the low degree of tessellation, the GeForce GTX 580 performs very confidently, outperforming even the Radeon HD 5970 in every mode. Of course, even in the most demanding mode DiRT 2 runs great on the Radeon HD 5870, and even more so on the Radeon HD 5970, but the frame counter consistently shows the green camp ahead, and in this test the GeForce GTX 580 wins convincingly. Let's see what happens next.

The cult classic Crysis set the standard for graphics for several years to come, and its image quality still easily competes with many games released this year. Here the GeForce GTX 580 falls behind AMD's senior Cypress-based accelerator, the dual-chip Radeon HD 5970, and is only slightly ahead of the single-chip Radeon HD 5870.

The GeForce GTX 580's advantage over its predecessor, the GeForce GTX 480, is about 15%, but that is enough to beat the Radeon HD 5970 even without overclocking. An excellent result.

In Just Cause 2, the new NVIDIA flagship is about 10-15% ahead of the GeForce GTX 480, depending on the graphics mode, but here this advantage is only enough to compete with the Radeon HD 5870, while the HD 5970 remains out of reach.

In Aliens vs. Predator, the GeForce GTX 580 easily beats the Radeon HD 5870 even in relatively “light” modes without anti-aliasing and anisotropic filtering, but even after overclocking the NVIDIA flagship could not catch up with, let alone overtake, the Radeon HD 5970.

The official Final Fantasy XIV benchmark handles multi-GPU solutions poorly and, judging by the results, ignores the second GPU of the Radeon HD 5970. Keep in mind that the flagship AMD accelerator runs at lower clock speeds than the Radeon HD 5870, which explains why the Radeon HD 5970 loses to AMD's cheaper card. As for NVIDIA products, the results at 1280×720 are apparently limited by the CPU, which cannot feed enough data to the GeForce GTX 480, let alone the GeForce GTX 580. As a result, the Radeon HD 5870 leads at the low resolution, while at 1920×1080 the GTX 580 wins, gaining very few points from overclocking. This, too, may be due to insufficient CPU performance.

In Metro 2033, the developers paid great attention to detail in the virtual world: even the cables on the walls are full-fledged 3D models rather than textures, as was common before. The Metro 2033 engine is very demanding on GPU resources, especially geometry processing and tessellation. In light modes the Radeon HD 5970 is very slightly ahead of the GeForce GTX 580, but in super-heavy modes NVIDIA's new card is the better choice over the HD 5970. In fact, even the GTX 480 performs better than the Radeon HD 5970 there.

The Lost Planet 2 engine shows a similar picture. The Radeon HD 5970 is slightly faster than the GeForce GTX 480 or on par with it, but the main subject of our review, the GeForce GTX 580, is ahead of all the red camp's cards in this test.

The Unigine Heaven 2.0 benchmark is one of the few applications that showcase the beauty of complex tessellation. To understand what we mean, see our separate article on tessellation. It has long been noted that one of the weak points of the Cypress architecture is its insufficiently powerful tessellation unit. The graphs show this clearly: even the Radeon HD 5970 is weaker than the GeForce GTX 480, and by a significant margin. The situation is unlikely to change for current Cypress-based AMD flagships, but the upcoming GPUs codenamed Cayman should have much more powerful tessellation units, which is easy to believe given how much faster the mid-range AMD Radeon HD 6870 has become.

Before the arcade flight simulator HAWX 2 hit store shelves, its benchmark, released on the very day the Radeon HD 6850/6870 were announced, caused quite a stir. The controversy was over the release of an unfinished beta version of the HAWX 2 benchmark, which many immediately saw as an NVIDIA attempt to dampen public interest in the Radeon HD 6850/6870. We will not judge NVIDIA for this, especially since the full version of the HAWX 2 benchmark, with working DirectX 11 mode and tessellation, has now been released, so it is time to test. Unfortunately, even the fastest AMD accelerator trails the GTX 580 by almost a factor of two. As far as we know, AMD is preparing driver fixes to minimize its lag behind NVIDIA GPUs in this test.

As a small addition, note that the effect of tessellation in this game is visible to the naked eye. Below are screenshots from NVIDIA's official presentation, which clearly show the difference in terrain rendering quality.

The first thing that catches the eye in the Mafia II results is the tiny gap between the Radeon HD 5970 and the Radeon HD 5870. Of course, the Radeon HD 5970 is not expected to be twice as fast as its younger sibling, or even close to it, but a 10% difference is too small. Reinstalling the drivers unfortunately did not help; we can only hope for future driver versions. For now, the Radeon HD 5870 and Radeon HD 5970 can compete in Mafia II only with the GeForce GTX 480, winning in some places and losing in others to the former king of NVIDIA's lineup. The GeForce GTX 580 stands apart: by and large, it does not need overclocking to beat its competitors.